#@title Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# https://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

Train Your Own Model and Serve It With TensorFlow Serving

In this notebook, you will train a neural network to classify images of handwritten digits from the MNIST dataset. You will then save the trained model, and serve it using TensorFlow Serving.

Warning: This notebook is designed to run in Google Colab only. It installs packages on the system and requires root access. If you run it in a local Jupyter notebook, proceed with caution.

Setup

try:
    %tensorflow_version 2.x
except:
    pass

Colab only includes TensorFlow 2.x; %tensorflow_version has no effect.

import os

import json

import tempfile

import requests

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

print("\u2022 Using TensorFlow Version:", tf.__version__)

• Using TensorFlow Version: 2.14.0

Import the MNIST Dataset

The MNIST dataset contains 70,000 grayscale images of the digits 0 through 9. The images show individual digits at a low resolution (28 by 28 pixels).

Even though these are really images, we will load them as NumPy arrays and not as binary image objects.

mnist = tf.keras.datasets.mnist

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz

11490434/11490434 [==============================] - 2s 0us/step

# EXERCISE: Scale the values of the arrays below to be between 0.0 and 1.0.

train_images = train_images / 255.

test_images = test_images / 255.
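The scaling step can be checked in isolation. The following is a minimal sketch on synthetic data (the `raw` array is a made-up stand-in for MNIST pixels, not the real dataset). It shows that dividing a `uint8` array by a Python float yields `float64`, and that an optional cast to `float32` would match the `DT_FLOAT` input the saved model reports later in this notebook.

```python
import numpy as np

# Synthetic stand-in for a few MNIST images: uint8 pixels in [0, 255].
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)

# Dividing a uint8 array by a Python float promotes the result to float64.
scaled64 = raw / 255.0

# Optional: cast to float32 to halve the memory footprint.
scaled32 = scaled64.astype(np.float32)

print(scaled64.dtype, scaled32.dtype)
print(float(scaled32.min()) >= 0.0 and float(scaled32.max()) <= 1.0)
```

The cast is optional here, because Keras converts the inputs to the model's dtype during training.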

In the cell below, use the .reshape method to reshape the arrays to the following shapes:

train_images.shape: (60000, 28, 28, 1)

test_images.shape: (10000, 28, 28, 1)

# EXERCISE: Reshape the arrays below.

train_images = train_images.reshape(train_images.shape[0], 28, 28, 1)

test_images = test_images.reshape(test_images.shape[0], 28, 28, 1)

print('\ntrain_images.shape: {}, of {}'.format(train_images.shape, train_images.dtype))

print('test_images.shape: {}, of {}'.format(test_images.shape, test_images.dtype))

train_images.shape: (60000, 28, 28, 1), of float64

test_images.shape: (10000, 28, 28, 1), of float64
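Adding the trailing channel dimension does not change the pixel data, only the shape. As a small sketch on a dummy array (the `batch` name and its size are illustrative), these three spellings give the same result:

```python
import numpy as np

# Dummy batch of five 28x28 grayscale images without a channel axis.
batch = np.arange(5 * 28 * 28, dtype=np.float32).reshape(5, 28, 28)

# Three equivalent ways to append a channel axis of size 1.
with_reshape = batch.reshape(batch.shape[0], 28, 28, 1)
with_newaxis = batch[..., np.newaxis]
with_expand = np.expand_dims(batch, axis=-1)

print(with_reshape.shape)
print(np.array_equal(with_reshape, with_newaxis))
print(np.array_equal(with_reshape, with_expand))
```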

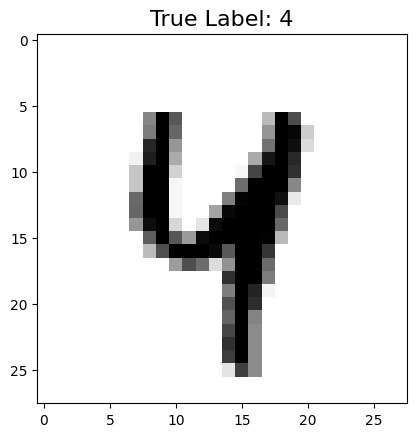

Look at a Sample Image

idx = 42

plt.imshow(test_images[idx].reshape(28,28), cmap=plt.cm.binary)

plt.title('True Label: {}'.format(test_labels[idx]), fontdict={'size': 16})

plt.show()

Build a Model

In the cell below build a tf.keras.Sequential model that can be used to classify the images of the MNIST dataset. Feel free to use the simplest possible CNN. Make sure your model has the correct input_shape and the correct number of output units.

# EXERCISE: Create a model.

model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(input_shape=(28,28,1), filters=8, kernel_size=3,
                           strides=2, activation='relu', name='Conv1'),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10, activation=tf.nn.softmax, name='Softmax')
])

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

Conv1 (Conv2D) (None, 13, 13, 8) 80

flatten (Flatten) (None, 1352) 0

Softmax (Dense) (None, 10) 13530

=================================================================

Total params: 13610 (53.16 KB)

Trainable params: 13610 (53.16 KB)

Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________
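The numbers in the summary can be reproduced by hand. The sketch below assumes the Conv2D layer uses its default 'valid' padding (no padding argument is passed in the model above). The helper name `conv_output_size` is illustrative.

```python
def conv_output_size(input_size, kernel_size, stride):
    """Spatial output size of a convolution with 'valid' (no) padding."""
    return (input_size - kernel_size) // stride + 1

# Conv1: 28x28x1 input, 8 filters of size 3x3, stride 2.
side = conv_output_size(28, kernel_size=3, stride=2)
conv_params = 3 * 3 * 1 * 8 + 8          # weights + one bias per filter

# Flatten: all activations of Conv1 laid out in one vector.
flat_units = side * side * 8

# Softmax: fully connected layer with 10 outputs.
dense_params = flat_units * 10 + 10      # weights + one bias per output

total_params = conv_params + dense_params
print(side, conv_params, flat_units, dense_params, total_params)
```

The printed values match the summary: a 13 by 13 feature map, 80 and 13530 parameters per layer, and 13610 in total.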

Train the Model

In the cell below configure your model for training using the adam optimizer, sparse_categorical_crossentropy as the loss, and accuracy for your metrics. Then train the model for the given number of epochs, using the train_images array.

# EXERCISE: Configure the model for training.

model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

epochs = 5

# EXERCISE: Train the model.

history = model.fit(train_images, train_labels, epochs=epochs)

Epoch 1/5

1875/1875 [==============================] - 20s 5ms/step - loss: 0.3630 - accuracy: 0.8996

Epoch 2/5

1875/1875 [==============================] - 5s 3ms/step - loss: 0.2050 - accuracy: 0.9423

Epoch 3/5

1875/1875 [==============================] - 6s 3ms/step - loss: 0.1609 - accuracy: 0.9545

Epoch 4/5

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1326 - accuracy: 0.9620

Epoch 5/5

1875/1875 [==============================] - 6s 3ms/step - loss: 0.1161 - accuracy: 0.9664
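To make the reported loss and accuracy values concrete, here is a minimal NumPy sketch of what sparse categorical cross-entropy and accuracy measure. The labels are integer class indices, as in train_labels. This is an illustration with made-up probabilities, not the Keras implementation, and the `eps` clipping value is an assumption.

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean negative log-probability assigned to the true class."""
    probs = np.clip(probs, eps, 1.0 - eps)          # avoid log(0)
    picked = probs[np.arange(len(labels)), labels]  # probability of the true class
    return float(-np.log(picked).mean())

def accuracy(labels, probs):
    """Fraction of samples whose most probable class is the true class."""
    return float((probs.argmax(axis=1) == labels).mean())

# Two made-up samples over three classes.
labels = np.array([0, 2])
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.1, 0.8]])

loss = sparse_categorical_crossentropy(labels, probs)
acc = accuracy(labels, probs)
print(round(loss, 4), acc)
```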

Evaluate the Model

# EXERCISE: Evaluate the model on the test images.

results_eval = model.evaluate(test_images, test_labels, verbose=0)

for metric, value in zip(model.metrics_names, results_eval):
    print(metric + ': {:.3}'.format(value))

loss: 0.108

accuracy: 0.968

Save the Model

MODEL_DIR = tempfile.gettempdir()

version = 1

export_path = os.path.join(MODEL_DIR, str(version))

if os.path.isdir(export_path):
    print('\nAlready saved a model, cleaning up\n')
    !rm -r {export_path}

model.save(export_path, save_format="tf")

print('\nexport_path = {}'.format(export_path))

!ls -l {export_path}

export_path = /tmp/1

total 104

drwxr-xr-x 2 root root 4096 Oct 27 15:27 assets

-rw-r--r-- 1 root root 55 Oct 27 15:27 fingerprint.pb

-rw-r--r-- 1 root root 8612 Oct 27 15:27 keras_metadata.pb

-rw-r--r-- 1 root root 78782 Oct 27 15:27 saved_model.pb

drwxr-xr-x 2 root root 4096 Oct 27 15:27 variables
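The model is saved into a numbered subdirectory (here 1) because TensorFlow Serving treats each numeric subdirectory of the base path as a model version and, by default, serves the highest one. The sketch below mimics that selection on a throwaway directory. The helper `latest_version` is hypothetical and only illustrates the idea. It is not part of TensorFlow Serving.

```python
import os
import tempfile

def latest_version(base_dir):
    """Return the highest integer-named subdirectory of base_dir, or None."""
    versions = [
        int(name)
        for name in os.listdir(base_dir)
        if name.isdigit() and os.path.isdir(os.path.join(base_dir, name))
    ]
    return max(versions, default=None)

with tempfile.TemporaryDirectory() as base_dir:
    # Three fake versions plus an unrelated directory that is ignored.
    for name in ("1", "2", "10", "notes"):
        os.makedirs(os.path.join(base_dir, name))
    newest = latest_version(base_dir)

print(newest)
```

Versions are compared as integers, so 10 ranks above 2 even though it sorts first as a string.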

Examine Your Saved Model

!saved_model_cli show --dir {export_path} --all

2023-10-27 15:27:47.879695: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

2023-10-27 15:27:47.879758: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

2023-10-27 15:27:47.879815: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

2023-10-27 15:27:48.948250: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['__saved_model_init_op']:

The given SavedModel SignatureDef contains the following input(s):

The given SavedModel SignatureDef contains the following output(s):

outputs['__saved_model_init_op'] tensor_info:

dtype: DT_INVALID

shape: unknown_rank

name: NoOp

Method name is:

signature_def['serving_default']:

The given SavedModel SignatureDef contains the following input(s):

inputs['Conv1_input'] tensor_info:

dtype: DT_FLOAT

shape: (-1, 28, 28, 1)

name: serving_default_Conv1_input:0

The given SavedModel SignatureDef contains the following output(s):

outputs['Softmax'] tensor_info:

dtype: DT_FLOAT

shape: (-1, 10)

name: StatefulPartitionedCall:0

Method name is: tensorflow/serving/predict

The MetaGraph with tag set ['serve'] contains the following ops: {'NoOp', 'BiasAdd', 'Conv2D', 'Relu', 'AssignVariableOp', 'DisableCopyOnRead', 'ShardedFilename', 'SaveV2', 'Reshape', 'StringJoin', 'MergeV2Checkpoints', 'Const', 'MatMul', 'StaticRegexFullMatch', 'Pack', 'ReadVariableOp', 'Select', 'Placeholder', 'VarHandleOp', 'Identity', 'StatefulPartitionedCall', 'RestoreV2', 'Softmax'}

2023-10-27 15:27:52.067908: W tensorflow/core/common_runtime/gpu/gpu_bfc_allocator.cc:47] Overriding orig_value setting because the TF_FORCE_GPU_ALLOW_GROWTH environment variable is set. Original config value was 0.

Concrete Functions:

Function Name: '__call__'

Option #1

Callable with:

Argument #1

Conv1_input: TensorSpec(shape=(None, 28, 28, 1), dtype=tf.float32, name='Conv1_input')

Argument #2

DType: bool

Value: True

Argument #3

DType: NoneType

Value: None

Option #2

Callable with:

Argument #1

Conv1_input: TensorSpec(shape=(None, 28, 28, 1), dtype=tf.float32, name='Conv1_input')

Argument #2

DType: bool

Value: False

Argument #3

DType: NoneType

Value: None

Function Name: '_default_save_signature'

Option #1

Callable with:

Argument #1

Conv1_input: TensorSpec(shape=(None, 28, 28, 1), dtype=tf.float32, name='Conv1_input')

Function Name: 'call_and_return_all_conditional_losses'

Option #1

Callable with:

Argument #1

Conv1_input: TensorSpec(shape=(None, 28, 28, 1), dtype=tf.float32, name='Conv1_input')

Argument #2

DType: bool

Value: True

Argument #3

DType: NoneType

Value: None

Option #2

Callable with:

Argument #1

Conv1_input: TensorSpec(shape=(None, 28, 28, 1), dtype=tf.float32, name='Conv1_input')

Argument #2

DType: bool

Value: False

Argument #3

DType: NoneType

Value: None
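The serving_default signature above tells you what a client must send: a float tensor of shape (-1, 28, 28, 1), where -1 is the batch size. A small client-side check, sketched below, can catch shape mistakes before a request is made. The helper `prepare_batch` and the constant `EXPECTED_ITEM_SHAPE` are illustrative names, not part of any TensorFlow API.

```python
import numpy as np

# Per-example shape taken from the serving_default input signature.
EXPECTED_ITEM_SHAPE = (28, 28, 1)

def prepare_batch(images):
    """Convert images to float32 and verify the per-example shape."""
    batch = np.asarray(images, dtype=np.float32)
    if batch.shape[1:] != EXPECTED_ITEM_SHAPE:
        raise ValueError(
            "expected shape (N, 28, 28, 1), got {}".format(batch.shape)
        )
    return batch

ok = prepare_batch(np.zeros((3, 28, 28, 1)))
print(ok.shape, ok.dtype)

try:
    prepare_batch(np.zeros((3, 28, 28)))  # channel axis is missing
except ValueError as err:
    print("rejected:", err)
```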

Add TensorFlow Serving Distribution URI as a Package Source

# This is the same as you would do from your command line, but without the [arch=amd64], and no sudo

# You would instead do:

# echo "deb [arch=amd64] http://storage.googleapis.com/tensorflow-serving-apt stable tensorflow-model-server tensorflow-model-server-universal" | sudo tee /etc/apt/sources.list.d/tensorflow-serving.list && \

# curl https://storage.googleapis.com/tensorflow-serving-apt/tensorflow-serving.release.pub.gpg | sudo apt-key add -

!echo "deb http://storage.googleapis.com/tensorflow-serving-apt stable tensorflow-model-server tensorflow-model-server-universal" | tee /etc/apt/sources.list.d/tensorflow-serving.list && \

curl https://storage.googleapis.com/tensorflow-serving-apt/tensorflow-serving.release.pub.gpg | apt-key add -

!apt update

deb http://storage.googleapis.com/tensorflow-serving-apt stable tensorflow-model-server tensorflow-model-server-universal

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

Warning: apt-key is deprecated. Manage keyring files in trusted.gpg.d instead (see apt-key(8)).

100 2943 100 2943 0 0 8810 0 --:--:-- --:--:-- --:--:-- 8811

OK

Ign:1 https://cloud.r-project.org/bin/linux/ubuntu jammy-cran40/ InRelease

Hit:2 https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64 InRelease

Get:3 http://storage.googleapis.com/tensorflow-serving-apt stable InRelease [3,026 B]

Hit:4 http://archive.ubuntu.com/ubuntu jammy InRelease

Get:5 http://security.ubuntu.com/ubuntu jammy-security InRelease [110 kB]

Get:6 http://archive.ubuntu.com/ubuntu jammy-updates InRelease [119 kB]

Get:7 https://ppa.launchpadcontent.net/c2d4u.team/c2d4u4.0+/ubuntu jammy InRelease [18.1 kB]

Ign:1 https://cloud.r-project.org/bin/linux/ubuntu jammy-cran40/ InRelease

Get:8 http://storage.googleapis.com/tensorflow-serving-apt stable/tensorflow-model-server amd64 Packages [340 B]

Get:9 http://storage.googleapis.com/tensorflow-serving-apt stable/tensorflow-model-server-universal amd64 Packages [348 B]

Get:10 http://security.ubuntu.com/ubuntu jammy-security/main amd64 Packages [1,131 kB]

Get:11 http://archive.ubuntu.com/ubuntu jammy-backports InRelease [109 kB]

Hit:12 https://ppa.launchpadcontent.net/deadsnakes/ppa/ubuntu jammy InRelease

Get:13 http://archive.ubuntu.com/ubuntu jammy-updates/universe amd64 Packages [1,274 kB]

Get:14 http://security.ubuntu.com/ubuntu jammy-security/universe amd64 Packages [1,009 kB]

Hit:15 https://ppa.launchpadcontent.net/graphics-drivers/ppa/ubuntu jammy InRelease

Get:16 http://archive.ubuntu.com/ubuntu jammy-updates/main amd64 Packages [1,400 kB]

Ign:1 https://cloud.r-project.org/bin/linux/ubuntu jammy-cran40/ InRelease

Hit:17 https://ppa.launchpadcontent.net/ubuntugis/ppa/ubuntu jammy InRelease

Get:18 https://ppa.launchpadcontent.net/c2d4u.team/c2d4u4.0+/ubuntu jammy/main Sources [2,230 kB]

Get:19 https://ppa.launchpadcontent.net/c2d4u.team/c2d4u4.0+/ubuntu jammy/main amd64 Packages [1,145 kB]

Err:1 https://cloud.r-project.org/bin/linux/ubuntu jammy-cran40/ InRelease

Could not resolve 'cloud.r-project.org'

Fetched 8,548 kB in 8s (1,074 kB/s)

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

27 packages can be upgraded. Run 'apt list --upgradable' to see them.

W: http://storage.googleapis.com/tensorflow-serving-apt/dists/stable/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

W: Failed to fetch https://cloud.r-project.org/bin/linux/ubuntu/jammy-cran40/InRelease Could not resolve 'cloud.r-project.org'

W: Some index files failed to download. They have been ignored, or old ones used instead.

Install TensorFlow Serving

!apt-get install tensorflow-model-server

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following NEW packages will be installed:

tensorflow-model-server

0 upgraded, 1 newly installed, 0 to remove and 27 not upgraded.

Need to get 463 MB of archives.

After this operation, 0 B of additional disk space will be used.

Get:1 http://storage.googleapis.com/tensorflow-serving-apt stable/tensorflow-model-server amd64 tensorflow-model-server all 2.14.0 [463 MB]

Fetched 463 MB in 28s (16.6 MB/s)

Selecting previously unselected package tensorflow-model-server.

(Reading database ... 120874 files and directories currently installed.)

Preparing to unpack .../tensorflow-model-server_2.14.0_all.deb ...

Unpacking tensorflow-model-server (2.14.0) ...

Setting up tensorflow-model-server (2.14.0) ...

Run the TensorFlow Model Server

You will now launch the TensorFlow model server with a bash script. In the cell below use the following parameters when running the TensorFlow model server:

- rest_api_port: Use port 8501 for your requests.

- model_name: Use digits_model as your model name.

- model_base_path: Use the environment variable MODEL_DIR defined below as the base path to the saved model.

os.environ["MODEL_DIR"] = MODEL_DIR

# EXERCISE: Fill in the missing code below.

%%bash --bg
nohup tensorflow_model_server \
  --rest_api_port=8501 \
  --model_name=digits_model \
  --model_base_path="${MODEL_DIR}" >server.log 2>&1

!tail server.log

tail: cannot open 'server.log' for reading: No such file or directory
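A server launched in the background needs a moment to start and load the model, so checking the log immediately can be inconclusive. One option is to poll until the server answers. The sketch below is a generic retry loop with an injectable `check` callable. The names `wait_until_ready` and `fake_check` are hypothetical, and the demo uses a fake check so it runs without a server.

```python
import time

def wait_until_ready(check, attempts=10, delay=1.0, sleep=time.sleep):
    """Call check() until it returns True; return the attempt number or None."""
    for attempt in range(1, attempts + 1):
        try:
            if check():
                return attempt
        except OSError:
            # Connection errors (refused, timed out) mean "not ready yet".
            pass
        if attempt < attempts:
            sleep(delay)
    return None

# Fake check: fails twice with a connection error, then succeeds.
calls = {"n": 0}

def fake_check():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionRefusedError("server not up yet")
    return True

ready_after = wait_until_ready(fake_check, attempts=5, delay=0.0,
                               sleep=lambda seconds: None)
print(ready_after)
```

In this notebook, a real check could be a function that sends a GET request to http://localhost:8501/v1/models/digits_model with a short timeout and returns whether the response status is OK. That assumes the server was started with the port and model name given above.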

Create JSON Object with Test Images

In the cell below construct a JSON object and use the first three images of the testing set (test_images) as your data.

# EXERCISE: Create JSON Object

data = json.dumps({"signature_name": "serving_default", "instances": test_images[0:3].tolist()})
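The call to .tolist() matters because the json module cannot serialize NumPy arrays directly. The following sketch uses a dummy batch of zeros in place of test_images. It shows the failure without .tolist() and the nesting of the resulting "instances" field, which holds one entry per image.

```python
import json
import numpy as np

# Dummy batch standing in for test_images[0:3].
images = np.zeros((3, 28, 28, 1), dtype=np.float32)

# NumPy arrays are not JSON serializable as-is.
try:
    json.dumps({"instances": images})
    direct_ok = True
except TypeError:
    direct_ok = False

# Converting to nested Python lists makes the payload serializable.
payload = json.dumps({"signature_name": "serving_default",
                      "instances": images.tolist()})

decoded = json.loads(payload)
instances = decoded["instances"]
nesting = (len(instances), len(instances[0]),
           len(instances[0][0]), len(instances[0][0][0]))
print(direct_ok, nesting)
```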

Make Inference Request

In the cell below, send a predict request as a POST to the server’s REST endpoint, and pass it your test data. You should ask the server to give you the latest version of your model.

# EXERCISE: Fill in the code below

headers = {"content-type": "application/json"}
json_response = requests.post(
    'http://localhost:8501/v1/models/digits_model:predict',
    data=data,
    headers=headers
)
predictions = json.loads(json_response.text)['predictions']

---------------------------------------------------------------------------

TimeoutError Traceback (most recent call last)

/usr/local/lib/python3.10/dist-packages/urllib3/connection.py in _new_conn(self)

202 try:

--> 203 sock = connection.create_connection(

204 (self._dns_host, self.port),

/usr/local/lib/python3.10/dist-packages/urllib3/util/connection.py in create_connection(address, timeout, source_address, socket_options)

84 try:

---> 85 raise err

86 finally:

/usr/local/lib/python3.10/dist-packages/urllib3/util/connection.py in create_connection(address, timeout, source_address, socket_options)

72 sock.bind(source_address)

---> 73 sock.connect(sa)

74 # Break explicitly a reference cycle

TimeoutError: [Errno 110] Connection timed out

The above exception was the direct cause of the following exception:

ConnectTimeoutError Traceback (most recent call last)

/usr/local/lib/python3.10/dist-packages/urllib3/connectionpool.py in urlopen(self, method, url, body, headers, retries, redirect, assert_same_host, timeout, pool_timeout, release_conn, chunked, body_pos, preload_content, decode_content, **response_kw)

790 # Make the request on the HTTPConnection object

--> 791 response = self._make_request(

792 conn,

/usr/local/lib/python3.10/dist-packages/urllib3/connectionpool.py in _make_request(self, conn, method, url, body, headers, retries, timeout, chunked, response_conn, preload_content, decode_content, enforce_content_length)

496 try:

--> 497 conn.request(

498 method,

/usr/local/lib/python3.10/dist-packages/urllib3/connection.py in request(self, method, url, body, headers, chunked, preload_content, decode_content, enforce_content_length)

394 self.putheader(header, value)

--> 395 self.endheaders()

396

/usr/lib/python3.10/http/client.py in endheaders(self, message_body, encode_chunked)

1277 raise CannotSendHeader()

-> 1278 self._send_output(message_body, encode_chunked=encode_chunked)

1279

/usr/lib/python3.10/http/client.py in _send_output(self, message_body, encode_chunked)

1037 del self._buffer[:]

-> 1038 self.send(msg)

1039

/usr/lib/python3.10/http/client.py in send(self, data)

975 if self.auto_open:

--> 976 self.connect()

977 else:

/usr/local/lib/python3.10/dist-packages/urllib3/connection.py in connect(self)

242 def connect(self) -> None:

--> 243 self.sock = self._new_conn()

244 if self._tunnel_host:

/usr/local/lib/python3.10/dist-packages/urllib3/connection.py in _new_conn(self)

211 except SocketTimeout as e:

--> 212 raise ConnectTimeoutError(

213 self,

ConnectTimeoutError: (<urllib3.connection.HTTPConnection object at 0x7fe8d01f9960>, 'Connection to 192.168.1.1 timed out. (connect timeout=None)')

The above exception was the direct cause of the following exception:

MaxRetryError Traceback (most recent call last)

/usr/local/lib/python3.10/dist-packages/requests/adapters.py in send(self, request, stream, timeout, verify, cert, proxies)

485 try:

--> 486 resp = conn.urlopen(

487 method=request.method,

/usr/local/lib/python3.10/dist-packages/urllib3/connectionpool.py in urlopen(self, method, url, body, headers, retries, redirect, assert_same_host, timeout, pool_timeout, release_conn, chunked, body_pos, preload_content, decode_content, **response_kw)

844

--> 845 retries = retries.increment(

846 method, url, error=new_e, _pool=self, _stacktrace=sys.exc_info()[2]

/usr/local/lib/python3.10/dist-packages/urllib3/util/retry.py in increment(self, method, url, response, error, _pool, _stacktrace)

514 reason = error or ResponseError(cause)

--> 515 raise MaxRetryError(_pool, url, reason) from reason # type: ignore[arg-type]

516

MaxRetryError: HTTPConnectionPool(host='192.168.1.1', port=8501): Max retries exceeded with url: /v1/models/digits_model:predict (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x7fe8d01f9960>, 'Connection to 192.168.1.1 timed out. (connect timeout=None)'))

During handling of the above exception, another exception occurred:

ConnectTimeout Traceback (most recent call last)

<ipython-input-20-fb6225678c6d> in <cell line: 3>()

1 # EXERCISE: Fill in the code below

2 headers = {"content-type": "application/json"}

----> 3 json_response = requests.post(

4 'http://192.168.1.1:8501/v1/models/digits_model:predict',

5 data=data,

/usr/local/lib/python3.10/dist-packages/requests/api.py in post(url, data, json, **kwargs)

113 """

114

--> 115 return request("post", url, data=data, json=json, **kwargs)

116

117

/usr/local/lib/python3.10/dist-packages/requests/api.py in request(method, url, **kwargs)

57 # cases, and look like a memory leak in others.

58 with sessions.Session() as session:

---> 59 return session.request(method=method, url=url, **kwargs)

60

61

/usr/local/lib/python3.10/dist-packages/requests/sessions.py in request(self, method, url, params, data, headers, cookies, files, auth, timeout, allow_redirects, proxies, hooks, stream, verify, cert, json)

587 }

588 send_kwargs.update(settings)

--> 589 resp = self.send(prep, **send_kwargs)

590

591 return resp

/usr/local/lib/python3.10/dist-packages/requests/sessions.py in send(self, request, **kwargs)

701

702 # Send the request

--> 703 r = adapter.send(request, **kwargs)

704

705 # Total elapsed time of the request (approximately)

/usr/local/lib/python3.10/dist-packages/requests/adapters.py in send(self, request, stream, timeout, verify, cert, proxies)

505 # TODO: Remove this in 3.0.0: see #2811

506 if not isinstance(e.reason, NewConnectionError):

--> 507 raise ConnectTimeout(e, request=request)

508

509 if isinstance(e.reason, ResponseError):

ConnectTimeout: HTTPConnectionPool(host='192.168.1.1', port=8501): Max retries exceeded with url: /v1/models/digits_model:predict (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x7fe8d01f9960>, 'Connection to 192.168.1.1 timed out. (connect timeout=None)'))
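The predict URL encodes which model version you are asking for. Omitting the version segment requests the latest version, while adding /versions/N pins a specific one. The sketch below builds both forms. The helper `predict_url` is a hypothetical convenience function, and the defaults assume the server runs on the same machine with the REST port used in this notebook.

```python
def predict_url(model_name, host="localhost", port=8501, version=None):
    """Build a TensorFlow Serving REST predict URL.

    With version=None the server picks the latest available version.
    """
    url = "http://{}:{}/v1/models/{}".format(host, port, model_name)
    if version is not None:
        url += "/versions/{}".format(version)
    return url + ":predict"

latest_url = predict_url("digits_model")
pinned_url = predict_url("digits_model", version=1)
print(latest_url)
print(pinned_url)
```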

Plot Predictions

plt.figure(figsize=(10,15))

for i in range(3):
    plt.subplot(1,3,i+1)
    plt.imshow(test_images[i].reshape(28,28), cmap=plt.cm.binary)
    plt.axis('off')
    color = 'green' if np.argmax(predictions[i]) == test_labels[i] else 'red'
    plt.title('Prediction: {}\nTrue Label: {}'.format(np.argmax(predictions[i]), test_labels[i]), color=color)

plt.show()
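The plotting code relies on each entry of predictions being a list of class probabilities, so np.argmax picks the predicted digit. The sketch below decodes a made-up response body with the same "predictions" layout, shortened to three classes for readability. The numbers are invented for illustration.

```python
import json
import numpy as np

# Made-up response body with the same structure as the server's reply.
response_text = json.dumps({"predictions": [[0.1, 0.7, 0.2],
                                            [0.8, 0.1, 0.1]]})

predictions = np.array(json.loads(response_text)["predictions"])

# Most probable class per example, and the probability assigned to it.
predicted_labels = predictions.argmax(axis=1)
confidences = predictions.max(axis=1)

print(predicted_labels.tolist(), confidences.tolist())
```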