Week 1 Assignment: Neural Style Transfer

Welcome to the first programming assignment of this course! Here, you will implement neural style transfer using the Inception model as your feature extractor. This is very similar to the Neural Style Transfer ungraded lab, so if you get stuck, review that notebook for tips.

Important: This colab notebook has read-only access so you won’t be able to save your changes. If you want to save your work periodically, please click File -> Save a Copy in Drive to create a copy in your account, then work from there.

Imports

try:
    # %tensorflow_version only exists in Colab.
    %tensorflow_version 2.x
except Exception:
    pass

import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np
from keras import backend as K
from imageio import mimsave
from IPython.display import display as display_fn
from IPython.display import Image, clear_output

Colab only includes TensorFlow 2.x; %tensorflow_version has no effect.

Utilities

As before, we’ve provided some utility functions below to help in loading, visualizing, and preprocessing the images.

def tensor_to_image(tensor):
    '''converts a tensor to an image'''
    tensor_shape = tf.shape(tensor)
    number_elem_shape = tf.shape(tensor_shape)
    if number_elem_shape > 3:
        assert tensor_shape[0] == 1
        tensor = tensor[0]
    return tf.keras.preprocessing.image.array_to_img(tensor)

def load_img(path_to_img):
    '''loads an image as a tensor and scales its longer side to 512 pixels'''
    max_dim = 512
    image = tf.io.read_file(path_to_img)
    image = tf.image.decode_jpeg(image)
    image = tf.image.convert_image_dtype(image, tf.float32)

    # height and width as floats (the channel dimension is dropped)
    shape = tf.cast(tf.shape(image)[:-1], tf.float32)
    long_dim = max(shape)
    scale = max_dim / long_dim

    # scale both sides by the same factor to keep the aspect ratio
    new_shape = tf.cast(shape * scale, tf.int32)
    image = tf.image.resize(image, new_shape)

    # add a batch dimension and convert back to uint8 in [0, 255]
    image = image[tf.newaxis, :]
    image = tf.image.convert_image_dtype(image, tf.uint8)
    return image
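The resizing step in load_img scales both sides by the same factor, so the longer side ends up at max_dim and the aspect ratio is preserved. A minimal pure-Python sketch of that arithmetic follows; the helper name scaled_shape is hypothetical and not part of the assignment, and it only mirrors the shape computation, not the actual image resize.

```python
def scaled_shape(height, width, max_dim=512):
    """Return (new_height, new_width) with the longer side scaled to max_dim."""
    long_dim = max(height, width)
    scale = max_dim / long_dim
    # int() truncates toward zero, like tf.cast(..., tf.int32) on positive values
    return int(height * scale), int(width * scale)


print(scaled_shape(768, 1024))   # (384, 512)
print(scaled_shape(2048, 1024))  # (512, 256)
```

Because the result is truncated rather than rounded, the shorter side can come out one pixel smaller than an exact ratio would suggest.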

def load_images(content_path, style_path):
    '''loads the content and style images as tensors'''
    content_image = load_img("{}".format(content_path))
    style_image = load_img("{}".format(style_path))
    return content_image, style_image

def imshow(image, title=None):
    '''displays an image with a corresponding title'''
    if len(image.shape) > 3:
        image = tf.squeeze(image, axis=0)
    plt.imshow(image)
    if title:
        plt.title(title)

def show_images_with_objects(images, titles=[]):
    '''displays a row of images with corresponding titles'''
    if len(images) != len(titles):
        return

    plt.figure(figsize=(20, 12))
    for idx, (image, title) in enumerate(zip(images, titles)):
        plt.subplot(1, len(images), idx + 1)
        plt.xticks([])
        plt.yticks([])
        imshow(image, title)

def clip_image_values(image, min_value=0.0, max_value=255.0):
    '''clips the image pixel values by the given min and max'''
    return tf.clip_by_value(image, clip_value_min=min_value, clip_value_max=max_value)

def preprocess_image(image):
    '''preprocesses a given image to use with Inception model'''
    image = tf.cast(image, dtype=tf.float32)
    image = (image / 127.5) - 1.0
    return image
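preprocess_image rescales pixel values from the range [0, 255] to [-1, 1]. The sketch below reproduces that arithmetic on plain Python numbers so the endpoints are easy to verify; scale_pixel is a hypothetical name used only for illustration.

```python
def scale_pixel(value):
    """Map a pixel value in [0, 255] to [-1, 1], using the same formula as preprocess_image."""
    return (value / 127.5) - 1.0


for v in (0, 127.5, 255):
    print(v, "->", scale_pixel(v))
# 0 -> -1.0
# 127.5 -> 0.0
# 255 -> 1.0
```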

Download Images

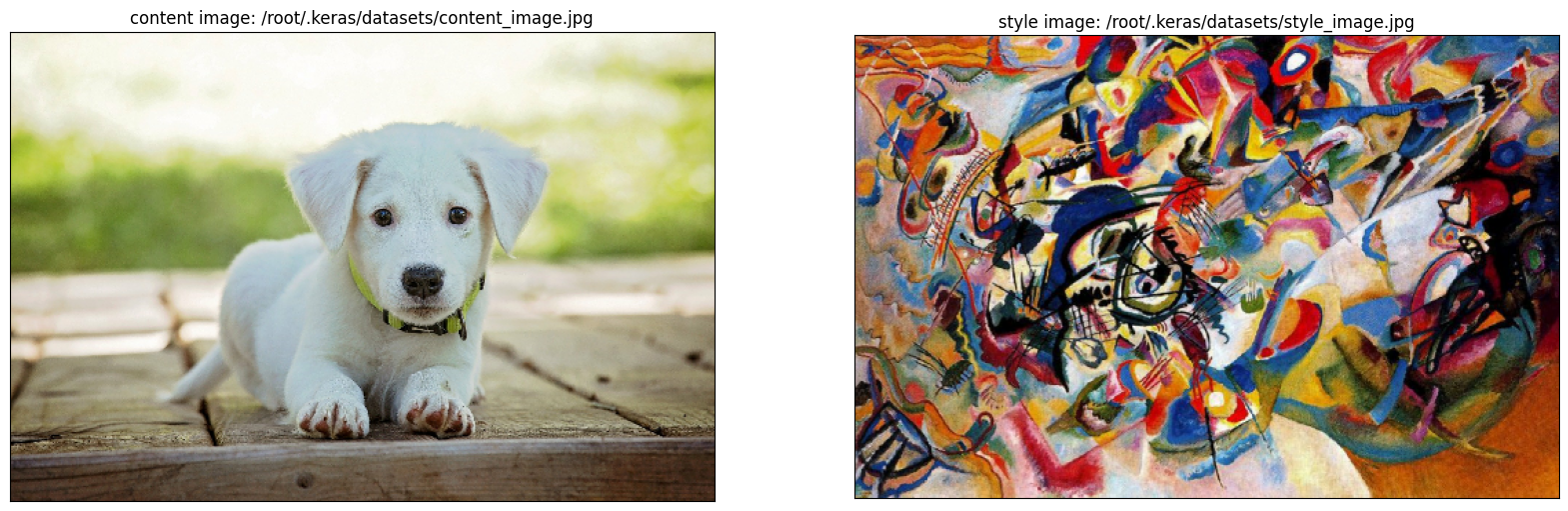

You will fetch the two images you will use for the content and style image.

content_path = tf.keras.utils.get_file('content_image.jpg','https://storage.googleapis.com/tensorflow-1-public/tensorflow-3-temp/MLColabImages/dog1.jpeg')

style_path = tf.keras.utils.get_file('style_image.jpg','https://storage.googleapis.com/download.tensorflow.org/example_images/Vassily_Kandinsky%2C_1913_-_Composition_7.jpg')

# display the content and style image
content_image, style_image = load_images(content_path, style_path)
show_images_with_objects([content_image, style_image],
                         titles=[f'content image: {content_path}',
                                 f'style image: {style_path}'])

Build the feature extractor

Next, you will inspect the layers of the Inception model.

# clear session to make layer naming consistent when re-running this cell

K.clear_session()

# download the inception model and inspect the layers

tmp_inception = tf.keras.applications.InceptionV3()

tmp_inception.summary()

# delete temporary model

del tmp_inception

Model: "inception_v3"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 299, 299, 3)] 0 []

conv2d (Conv2D) (None, 149, 149, 32) 864 ['input_1[0][0]']

batch_normalization (Batch (None, 149, 149, 32) 96 ['conv2d[0][0]']

Normalization)

activation (Activation) (None, 149, 149, 32) 0 ['batch_normalization[0][0]']

conv2d_1 (Conv2D) (None, 147, 147, 32) 9216 ['activation[0][0]']

batch_normalization_1 (Bat (None, 147, 147, 32) 96 ['conv2d_1[0][0]']

chNormalization)

activation_1 (Activation) (None, 147, 147, 32) 0 ['batch_normalization_1[0][0]'

]

conv2d_2 (Conv2D) (None, 147, 147, 64) 18432 ['activation_1[0][0]']

batch_normalization_2 (Bat (None, 147, 147, 64) 192 ['conv2d_2[0][0]']

chNormalization)

activation_2 (Activation) (None, 147, 147, 64) 0 ['batch_normalization_2[0][0]'

]

max_pooling2d (MaxPooling2 (None, 73, 73, 64) 0 ['activation_2[0][0]']

D)

conv2d_3 (Conv2D) (None, 73, 73, 80) 5120 ['max_pooling2d[0][0]']

batch_normalization_3 (Bat (None, 73, 73, 80) 240 ['conv2d_3[0][0]']

chNormalization)

activation_3 (Activation) (None, 73, 73, 80) 0 ['batch_normalization_3[0][0]'

]

conv2d_4 (Conv2D) (None, 71, 71, 192) 138240 ['activation_3[0][0]']

batch_normalization_4 (Bat (None, 71, 71, 192) 576 ['conv2d_4[0][0]']

chNormalization)

activation_4 (Activation) (None, 71, 71, 192) 0 ['batch_normalization_4[0][0]'

]

max_pooling2d_1 (MaxPoolin (None, 35, 35, 192) 0 ['activation_4[0][0]']

g2D)

conv2d_8 (Conv2D) (None, 35, 35, 64) 12288 ['max_pooling2d_1[0][0]']

batch_normalization_8 (Bat (None, 35, 35, 64) 192 ['conv2d_8[0][0]']

chNormalization)

activation_8 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_8[0][0]'

]

conv2d_6 (Conv2D) (None, 35, 35, 48) 9216 ['max_pooling2d_1[0][0]']

conv2d_9 (Conv2D) (None, 35, 35, 96) 55296 ['activation_8[0][0]']

batch_normalization_6 (Bat (None, 35, 35, 48) 144 ['conv2d_6[0][0]']

chNormalization)

batch_normalization_9 (Bat (None, 35, 35, 96) 288 ['conv2d_9[0][0]']

chNormalization)

activation_6 (Activation) (None, 35, 35, 48) 0 ['batch_normalization_6[0][0]'

]

activation_9 (Activation) (None, 35, 35, 96) 0 ['batch_normalization_9[0][0]'

]

average_pooling2d (Average (None, 35, 35, 192) 0 ['max_pooling2d_1[0][0]']

Pooling2D)

conv2d_5 (Conv2D) (None, 35, 35, 64) 12288 ['max_pooling2d_1[0][0]']

conv2d_7 (Conv2D) (None, 35, 35, 64) 76800 ['activation_6[0][0]']

conv2d_10 (Conv2D) (None, 35, 35, 96) 82944 ['activation_9[0][0]']

conv2d_11 (Conv2D) (None, 35, 35, 32) 6144 ['average_pooling2d[0][0]']

batch_normalization_5 (Bat (None, 35, 35, 64) 192 ['conv2d_5[0][0]']

chNormalization)

batch_normalization_7 (Bat (None, 35, 35, 64) 192 ['conv2d_7[0][0]']

chNormalization)

batch_normalization_10 (Ba (None, 35, 35, 96) 288 ['conv2d_10[0][0]']

tchNormalization)

batch_normalization_11 (Ba (None, 35, 35, 32) 96 ['conv2d_11[0][0]']

tchNormalization)

activation_5 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_5[0][0]'

]

activation_7 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_7[0][0]'

]

activation_10 (Activation) (None, 35, 35, 96) 0 ['batch_normalization_10[0][0]

']

activation_11 (Activation) (None, 35, 35, 32) 0 ['batch_normalization_11[0][0]

']

mixed0 (Concatenate) (None, 35, 35, 256) 0 ['activation_5[0][0]',

'activation_7[0][0]',

'activation_10[0][0]',

'activation_11[0][0]']

conv2d_15 (Conv2D) (None, 35, 35, 64) 16384 ['mixed0[0][0]']

batch_normalization_15 (Ba (None, 35, 35, 64) 192 ['conv2d_15[0][0]']

tchNormalization)

activation_15 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_15[0][0]

']

conv2d_13 (Conv2D) (None, 35, 35, 48) 12288 ['mixed0[0][0]']

conv2d_16 (Conv2D) (None, 35, 35, 96) 55296 ['activation_15[0][0]']

batch_normalization_13 (Ba (None, 35, 35, 48) 144 ['conv2d_13[0][0]']

tchNormalization)

batch_normalization_16 (Ba (None, 35, 35, 96) 288 ['conv2d_16[0][0]']

tchNormalization)

activation_13 (Activation) (None, 35, 35, 48) 0 ['batch_normalization_13[0][0]

']

activation_16 (Activation) (None, 35, 35, 96) 0 ['batch_normalization_16[0][0]

']

average_pooling2d_1 (Avera (None, 35, 35, 256) 0 ['mixed0[0][0]']

gePooling2D)

conv2d_12 (Conv2D) (None, 35, 35, 64) 16384 ['mixed0[0][0]']

conv2d_14 (Conv2D) (None, 35, 35, 64) 76800 ['activation_13[0][0]']

conv2d_17 (Conv2D) (None, 35, 35, 96) 82944 ['activation_16[0][0]']

conv2d_18 (Conv2D) (None, 35, 35, 64) 16384 ['average_pooling2d_1[0][0]']

batch_normalization_12 (Ba (None, 35, 35, 64) 192 ['conv2d_12[0][0]']

tchNormalization)

batch_normalization_14 (Ba (None, 35, 35, 64) 192 ['conv2d_14[0][0]']

tchNormalization)

batch_normalization_17 (Ba (None, 35, 35, 96) 288 ['conv2d_17[0][0]']

tchNormalization)

batch_normalization_18 (Ba (None, 35, 35, 64) 192 ['conv2d_18[0][0]']

tchNormalization)

activation_12 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_12[0][0]

']

activation_14 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_14[0][0]

']

activation_17 (Activation) (None, 35, 35, 96) 0 ['batch_normalization_17[0][0]

']

activation_18 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_18[0][0]

']

mixed1 (Concatenate) (None, 35, 35, 288) 0 ['activation_12[0][0]',

'activation_14[0][0]',

'activation_17[0][0]',

'activation_18[0][0]']

conv2d_22 (Conv2D) (None, 35, 35, 64) 18432 ['mixed1[0][0]']

batch_normalization_22 (Ba (None, 35, 35, 64) 192 ['conv2d_22[0][0]']

tchNormalization)

activation_22 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_22[0][0]

']

conv2d_20 (Conv2D) (None, 35, 35, 48) 13824 ['mixed1[0][0]']

conv2d_23 (Conv2D) (None, 35, 35, 96) 55296 ['activation_22[0][0]']

batch_normalization_20 (Ba (None, 35, 35, 48) 144 ['conv2d_20[0][0]']

tchNormalization)

batch_normalization_23 (Ba (None, 35, 35, 96) 288 ['conv2d_23[0][0]']

tchNormalization)

activation_20 (Activation) (None, 35, 35, 48) 0 ['batch_normalization_20[0][0]

']

activation_23 (Activation) (None, 35, 35, 96) 0 ['batch_normalization_23[0][0]

']

average_pooling2d_2 (Avera (None, 35, 35, 288) 0 ['mixed1[0][0]']

gePooling2D)

conv2d_19 (Conv2D) (None, 35, 35, 64) 18432 ['mixed1[0][0]']

conv2d_21 (Conv2D) (None, 35, 35, 64) 76800 ['activation_20[0][0]']

conv2d_24 (Conv2D) (None, 35, 35, 96) 82944 ['activation_23[0][0]']

conv2d_25 (Conv2D) (None, 35, 35, 64) 18432 ['average_pooling2d_2[0][0]']

batch_normalization_19 (Ba (None, 35, 35, 64) 192 ['conv2d_19[0][0]']

tchNormalization)

batch_normalization_21 (Ba (None, 35, 35, 64) 192 ['conv2d_21[0][0]']

tchNormalization)

batch_normalization_24 (Ba (None, 35, 35, 96) 288 ['conv2d_24[0][0]']

tchNormalization)

batch_normalization_25 (Ba (None, 35, 35, 64) 192 ['conv2d_25[0][0]']

tchNormalization)

activation_19 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_19[0][0]

']

activation_21 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_21[0][0]

']

activation_24 (Activation) (None, 35, 35, 96) 0 ['batch_normalization_24[0][0]

']

activation_25 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_25[0][0]

']

mixed2 (Concatenate) (None, 35, 35, 288) 0 ['activation_19[0][0]',

'activation_21[0][0]',

'activation_24[0][0]',

'activation_25[0][0]']

conv2d_27 (Conv2D) (None, 35, 35, 64) 18432 ['mixed2[0][0]']

batch_normalization_27 (Ba (None, 35, 35, 64) 192 ['conv2d_27[0][0]']

tchNormalization)

activation_27 (Activation) (None, 35, 35, 64) 0 ['batch_normalization_27[0][0]

']

conv2d_28 (Conv2D) (None, 35, 35, 96) 55296 ['activation_27[0][0]']

batch_normalization_28 (Ba (None, 35, 35, 96) 288 ['conv2d_28[0][0]']

tchNormalization)

activation_28 (Activation) (None, 35, 35, 96) 0 ['batch_normalization_28[0][0]

']

conv2d_26 (Conv2D) (None, 17, 17, 384) 995328 ['mixed2[0][0]']

conv2d_29 (Conv2D) (None, 17, 17, 96) 82944 ['activation_28[0][0]']

batch_normalization_26 (Ba (None, 17, 17, 384) 1152 ['conv2d_26[0][0]']

tchNormalization)

batch_normalization_29 (Ba (None, 17, 17, 96) 288 ['conv2d_29[0][0]']

tchNormalization)

activation_26 (Activation) (None, 17, 17, 384) 0 ['batch_normalization_26[0][0]

']

activation_29 (Activation) (None, 17, 17, 96) 0 ['batch_normalization_29[0][0]

']

max_pooling2d_2 (MaxPoolin (None, 17, 17, 288) 0 ['mixed2[0][0]']

g2D)

mixed3 (Concatenate) (None, 17, 17, 768) 0 ['activation_26[0][0]',

'activation_29[0][0]',

'max_pooling2d_2[0][0]']

conv2d_34 (Conv2D) (None, 17, 17, 128) 98304 ['mixed3[0][0]']

batch_normalization_34 (Ba (None, 17, 17, 128) 384 ['conv2d_34[0][0]']

tchNormalization)

activation_34 (Activation) (None, 17, 17, 128) 0 ['batch_normalization_34[0][0]

']

conv2d_35 (Conv2D) (None, 17, 17, 128) 114688 ['activation_34[0][0]']

batch_normalization_35 (Ba (None, 17, 17, 128) 384 ['conv2d_35[0][0]']

tchNormalization)

activation_35 (Activation) (None, 17, 17, 128) 0 ['batch_normalization_35[0][0]

']

conv2d_31 (Conv2D) (None, 17, 17, 128) 98304 ['mixed3[0][0]']

conv2d_36 (Conv2D) (None, 17, 17, 128) 114688 ['activation_35[0][0]']

batch_normalization_31 (Ba (None, 17, 17, 128) 384 ['conv2d_31[0][0]']

tchNormalization)

batch_normalization_36 (Ba (None, 17, 17, 128) 384 ['conv2d_36[0][0]']

tchNormalization)

activation_31 (Activation) (None, 17, 17, 128) 0 ['batch_normalization_31[0][0]

']

activation_36 (Activation) (None, 17, 17, 128) 0 ['batch_normalization_36[0][0]

']

conv2d_32 (Conv2D) (None, 17, 17, 128) 114688 ['activation_31[0][0]']

conv2d_37 (Conv2D) (None, 17, 17, 128) 114688 ['activation_36[0][0]']

batch_normalization_32 (Ba (None, 17, 17, 128) 384 ['conv2d_32[0][0]']

tchNormalization)

batch_normalization_37 (Ba (None, 17, 17, 128) 384 ['conv2d_37[0][0]']

tchNormalization)

activation_32 (Activation) (None, 17, 17, 128) 0 ['batch_normalization_32[0][0]

']

activation_37 (Activation) (None, 17, 17, 128) 0 ['batch_normalization_37[0][0]

']

average_pooling2d_3 (Avera (None, 17, 17, 768) 0 ['mixed3[0][0]']

gePooling2D)

conv2d_30 (Conv2D) (None, 17, 17, 192) 147456 ['mixed3[0][0]']

conv2d_33 (Conv2D) (None, 17, 17, 192) 172032 ['activation_32[0][0]']

conv2d_38 (Conv2D) (None, 17, 17, 192) 172032 ['activation_37[0][0]']

conv2d_39 (Conv2D) (None, 17, 17, 192) 147456 ['average_pooling2d_3[0][0]']

batch_normalization_30 (Ba (None, 17, 17, 192) 576 ['conv2d_30[0][0]']

tchNormalization)

batch_normalization_33 (Ba (None, 17, 17, 192) 576 ['conv2d_33[0][0]']

tchNormalization)

batch_normalization_38 (Ba (None, 17, 17, 192) 576 ['conv2d_38[0][0]']

tchNormalization)

batch_normalization_39 (Ba (None, 17, 17, 192) 576 ['conv2d_39[0][0]']

tchNormalization)

activation_30 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_30[0][0]

']

activation_33 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_33[0][0]

']

activation_38 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_38[0][0]

']

activation_39 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_39[0][0]

']

mixed4 (Concatenate) (None, 17, 17, 768) 0 ['activation_30[0][0]',

'activation_33[0][0]',

'activation_38[0][0]',

'activation_39[0][0]']

conv2d_44 (Conv2D) (None, 17, 17, 160) 122880 ['mixed4[0][0]']

batch_normalization_44 (Ba (None, 17, 17, 160) 480 ['conv2d_44[0][0]']

tchNormalization)

activation_44 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_44[0][0]

']

conv2d_45 (Conv2D) (None, 17, 17, 160) 179200 ['activation_44[0][0]']

batch_normalization_45 (Ba (None, 17, 17, 160) 480 ['conv2d_45[0][0]']

tchNormalization)

activation_45 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_45[0][0]

']

conv2d_41 (Conv2D) (None, 17, 17, 160) 122880 ['mixed4[0][0]']

conv2d_46 (Conv2D) (None, 17, 17, 160) 179200 ['activation_45[0][0]']

batch_normalization_41 (Ba (None, 17, 17, 160) 480 ['conv2d_41[0][0]']

tchNormalization)

batch_normalization_46 (Ba (None, 17, 17, 160) 480 ['conv2d_46[0][0]']

tchNormalization)

activation_41 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_41[0][0]

']

activation_46 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_46[0][0]

']

conv2d_42 (Conv2D) (None, 17, 17, 160) 179200 ['activation_41[0][0]']

conv2d_47 (Conv2D) (None, 17, 17, 160) 179200 ['activation_46[0][0]']

batch_normalization_42 (Ba (None, 17, 17, 160) 480 ['conv2d_42[0][0]']

tchNormalization)

batch_normalization_47 (Ba (None, 17, 17, 160) 480 ['conv2d_47[0][0]']

tchNormalization)

activation_42 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_42[0][0]

']

activation_47 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_47[0][0]

']

average_pooling2d_4 (Avera (None, 17, 17, 768) 0 ['mixed4[0][0]']

gePooling2D)

conv2d_40 (Conv2D) (None, 17, 17, 192) 147456 ['mixed4[0][0]']

conv2d_43 (Conv2D) (None, 17, 17, 192) 215040 ['activation_42[0][0]']

conv2d_48 (Conv2D) (None, 17, 17, 192) 215040 ['activation_47[0][0]']

conv2d_49 (Conv2D) (None, 17, 17, 192) 147456 ['average_pooling2d_4[0][0]']

batch_normalization_40 (Ba (None, 17, 17, 192) 576 ['conv2d_40[0][0]']

tchNormalization)

batch_normalization_43 (Ba (None, 17, 17, 192) 576 ['conv2d_43[0][0]']

tchNormalization)

batch_normalization_48 (Ba (None, 17, 17, 192) 576 ['conv2d_48[0][0]']

tchNormalization)

batch_normalization_49 (Ba (None, 17, 17, 192) 576 ['conv2d_49[0][0]']

tchNormalization)

activation_40 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_40[0][0]

']

activation_43 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_43[0][0]

']

activation_48 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_48[0][0]

']

activation_49 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_49[0][0]

']

mixed5 (Concatenate) (None, 17, 17, 768) 0 ['activation_40[0][0]',

'activation_43[0][0]',

'activation_48[0][0]',

'activation_49[0][0]']

conv2d_54 (Conv2D) (None, 17, 17, 160) 122880 ['mixed5[0][0]']

batch_normalization_54 (Ba (None, 17, 17, 160) 480 ['conv2d_54[0][0]']

tchNormalization)

activation_54 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_54[0][0]

']

conv2d_55 (Conv2D) (None, 17, 17, 160) 179200 ['activation_54[0][0]']

batch_normalization_55 (Ba (None, 17, 17, 160) 480 ['conv2d_55[0][0]']

tchNormalization)

activation_55 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_55[0][0]

']

conv2d_51 (Conv2D) (None, 17, 17, 160) 122880 ['mixed5[0][0]']

conv2d_56 (Conv2D) (None, 17, 17, 160) 179200 ['activation_55[0][0]']

batch_normalization_51 (Ba (None, 17, 17, 160) 480 ['conv2d_51[0][0]']

tchNormalization)

batch_normalization_56 (Ba (None, 17, 17, 160) 480 ['conv2d_56[0][0]']

tchNormalization)

activation_51 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_51[0][0]

']

activation_56 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_56[0][0]

']

conv2d_52 (Conv2D) (None, 17, 17, 160) 179200 ['activation_51[0][0]']

conv2d_57 (Conv2D) (None, 17, 17, 160) 179200 ['activation_56[0][0]']

batch_normalization_52 (Ba (None, 17, 17, 160) 480 ['conv2d_52[0][0]']

tchNormalization)

batch_normalization_57 (Ba (None, 17, 17, 160) 480 ['conv2d_57[0][0]']

tchNormalization)

activation_52 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_52[0][0]

']

activation_57 (Activation) (None, 17, 17, 160) 0 ['batch_normalization_57[0][0]

']

average_pooling2d_5 (Avera (None, 17, 17, 768) 0 ['mixed5[0][0]']

gePooling2D)

conv2d_50 (Conv2D) (None, 17, 17, 192) 147456 ['mixed5[0][0]']

conv2d_53 (Conv2D) (None, 17, 17, 192) 215040 ['activation_52[0][0]']

conv2d_58 (Conv2D) (None, 17, 17, 192) 215040 ['activation_57[0][0]']

conv2d_59 (Conv2D) (None, 17, 17, 192) 147456 ['average_pooling2d_5[0][0]']

batch_normalization_50 (Ba (None, 17, 17, 192) 576 ['conv2d_50[0][0]']

tchNormalization)

batch_normalization_53 (Ba (None, 17, 17, 192) 576 ['conv2d_53[0][0]']

tchNormalization)

batch_normalization_58 (Ba (None, 17, 17, 192) 576 ['conv2d_58[0][0]']

tchNormalization)

batch_normalization_59 (Ba (None, 17, 17, 192) 576 ['conv2d_59[0][0]']

tchNormalization)

activation_50 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_50[0][0]

']

activation_53 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_53[0][0]

']

activation_58 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_58[0][0]

']

activation_59 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_59[0][0]

']

mixed6 (Concatenate) (None, 17, 17, 768) 0 ['activation_50[0][0]',

'activation_53[0][0]',

'activation_58[0][0]',

'activation_59[0][0]']

conv2d_64 (Conv2D) (None, 17, 17, 192) 147456 ['mixed6[0][0]']

batch_normalization_64 (Ba (None, 17, 17, 192) 576 ['conv2d_64[0][0]']

tchNormalization)

activation_64 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_64[0][0]

']

conv2d_65 (Conv2D) (None, 17, 17, 192) 258048 ['activation_64[0][0]']

batch_normalization_65 (Ba (None, 17, 17, 192) 576 ['conv2d_65[0][0]']

tchNormalization)

activation_65 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_65[0][0]

']

conv2d_61 (Conv2D) (None, 17, 17, 192) 147456 ['mixed6[0][0]']

conv2d_66 (Conv2D) (None, 17, 17, 192) 258048 ['activation_65[0][0]']

batch_normalization_61 (Ba (None, 17, 17, 192) 576 ['conv2d_61[0][0]']

tchNormalization)

batch_normalization_66 (Ba (None, 17, 17, 192) 576 ['conv2d_66[0][0]']

tchNormalization)

activation_61 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_61[0][0]

']

activation_66 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_66[0][0]

']

conv2d_62 (Conv2D) (None, 17, 17, 192) 258048 ['activation_61[0][0]']

conv2d_67 (Conv2D) (None, 17, 17, 192) 258048 ['activation_66[0][0]']

batch_normalization_62 (Ba (None, 17, 17, 192) 576 ['conv2d_62[0][0]']

tchNormalization)

batch_normalization_67 (Ba (None, 17, 17, 192) 576 ['conv2d_67[0][0]']

tchNormalization)

activation_62 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_62[0][0]

']

activation_67 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_67[0][0]

']

average_pooling2d_6 (Avera (None, 17, 17, 768) 0 ['mixed6[0][0]']

gePooling2D)

conv2d_60 (Conv2D) (None, 17, 17, 192) 147456 ['mixed6[0][0]']

conv2d_63 (Conv2D) (None, 17, 17, 192) 258048 ['activation_62[0][0]']

conv2d_68 (Conv2D) (None, 17, 17, 192) 258048 ['activation_67[0][0]']

conv2d_69 (Conv2D) (None, 17, 17, 192) 147456 ['average_pooling2d_6[0][0]']

batch_normalization_60 (Ba (None, 17, 17, 192) 576 ['conv2d_60[0][0]']

tchNormalization)

batch_normalization_63 (Ba (None, 17, 17, 192) 576 ['conv2d_63[0][0]']

tchNormalization)

batch_normalization_68 (Ba (None, 17, 17, 192) 576 ['conv2d_68[0][0]']

tchNormalization)

batch_normalization_69 (Ba (None, 17, 17, 192) 576 ['conv2d_69[0][0]']

tchNormalization)

activation_60 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_60[0][0]

']

activation_63 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_63[0][0]

']

activation_68 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_68[0][0]

']

activation_69 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_69[0][0]

']

mixed7 (Concatenate) (None, 17, 17, 768) 0 ['activation_60[0][0]',

'activation_63[0][0]',

'activation_68[0][0]',

'activation_69[0][0]']

conv2d_72 (Conv2D) (None, 17, 17, 192) 147456 ['mixed7[0][0]']

batch_normalization_72 (Ba (None, 17, 17, 192) 576 ['conv2d_72[0][0]']

tchNormalization)

activation_72 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_72[0][0]

']

conv2d_73 (Conv2D) (None, 17, 17, 192) 258048 ['activation_72[0][0]']

batch_normalization_73 (Ba (None, 17, 17, 192) 576 ['conv2d_73[0][0]']

tchNormalization)

activation_73 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_73[0][0]

']

conv2d_70 (Conv2D) (None, 17, 17, 192) 147456 ['mixed7[0][0]']

conv2d_74 (Conv2D) (None, 17, 17, 192) 258048 ['activation_73[0][0]']

batch_normalization_70 (Ba (None, 17, 17, 192) 576 ['conv2d_70[0][0]']

tchNormalization)

batch_normalization_74 (Ba (None, 17, 17, 192) 576 ['conv2d_74[0][0]']

tchNormalization)

activation_70 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_70[0][0]

']

activation_74 (Activation) (None, 17, 17, 192) 0 ['batch_normalization_74[0][0]

']

conv2d_71 (Conv2D) (None, 8, 8, 320) 552960 ['activation_70[0][0]']

conv2d_75 (Conv2D) (None, 8, 8, 192) 331776 ['activation_74[0][0]']

batch_normalization_71 (Ba (None, 8, 8, 320) 960 ['conv2d_71[0][0]']

tchNormalization)

batch_normalization_75 (Ba (None, 8, 8, 192) 576 ['conv2d_75[0][0]']

tchNormalization)

activation_71 (Activation) (None, 8, 8, 320) 0 ['batch_normalization_71[0][0]

']

activation_75 (Activation) (None, 8, 8, 192) 0 ['batch_normalization_75[0][0]

']

max_pooling2d_3 (MaxPoolin (None, 8, 8, 768) 0 ['mixed7[0][0]']

g2D)

mixed8 (Concatenate) (None, 8, 8, 1280) 0 ['activation_71[0][0]',

'activation_75[0][0]',

'max_pooling2d_3[0][0]']

conv2d_80 (Conv2D) (None, 8, 8, 448) 573440 ['mixed8[0][0]']

batch_normalization_80 (Ba (None, 8, 8, 448) 1344 ['conv2d_80[0][0]']

tchNormalization)

activation_80 (Activation) (None, 8, 8, 448) 0 ['batch_normalization_80[0][0]

']

conv2d_77 (Conv2D) (None, 8, 8, 384) 491520 ['mixed8[0][0]']

conv2d_81 (Conv2D) (None, 8, 8, 384) 1548288 ['activation_80[0][0]']

batch_normalization_77 (Ba (None, 8, 8, 384) 1152 ['conv2d_77[0][0]']

tchNormalization)

batch_normalization_81 (Ba (None, 8, 8, 384) 1152 ['conv2d_81[0][0]']

tchNormalization)

activation_77 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_77[0][0]

']

activation_81 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_81[0][0]

']

conv2d_78 (Conv2D) (None, 8, 8, 384) 442368 ['activation_77[0][0]']

conv2d_79 (Conv2D) (None, 8, 8, 384) 442368 ['activation_77[0][0]']

conv2d_82 (Conv2D) (None, 8, 8, 384) 442368 ['activation_81[0][0]']

conv2d_83 (Conv2D) (None, 8, 8, 384) 442368 ['activation_81[0][0]']

average_pooling2d_7 (Avera (None, 8, 8, 1280) 0 ['mixed8[0][0]']

gePooling2D)

conv2d_76 (Conv2D) (None, 8, 8, 320) 409600 ['mixed8[0][0]']

batch_normalization_78 (Ba (None, 8, 8, 384) 1152 ['conv2d_78[0][0]']

tchNormalization)

batch_normalization_79 (Ba (None, 8, 8, 384) 1152 ['conv2d_79[0][0]']

tchNormalization)

batch_normalization_82 (Ba (None, 8, 8, 384) 1152 ['conv2d_82[0][0]']

tchNormalization)

batch_normalization_83 (Ba (None, 8, 8, 384) 1152 ['conv2d_83[0][0]']

tchNormalization)

conv2d_84 (Conv2D) (None, 8, 8, 192) 245760 ['average_pooling2d_7[0][0]']

batch_normalization_76 (Ba (None, 8, 8, 320) 960 ['conv2d_76[0][0]']

tchNormalization)

activation_78 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_78[0][0]

']

activation_79 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_79[0][0]

']

activation_82 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_82[0][0]

']

activation_83 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_83[0][0]

']

batch_normalization_84 (Ba (None, 8, 8, 192) 576 ['conv2d_84[0][0]']

tchNormalization)

activation_76 (Activation) (None, 8, 8, 320) 0 ['batch_normalization_76[0][0]

']

mixed9_0 (Concatenate) (None, 8, 8, 768) 0 ['activation_78[0][0]',

'activation_79[0][0]']

concatenate (Concatenate) (None, 8, 8, 768) 0 ['activation_82[0][0]',

'activation_83[0][0]']

activation_84 (Activation) (None, 8, 8, 192) 0 ['batch_normalization_84[0][0]

']

mixed9 (Concatenate) (None, 8, 8, 2048) 0 ['activation_76[0][0]',

'mixed9_0[0][0]',

'concatenate[0][0]',

'activation_84[0][0]']

conv2d_89 (Conv2D) (None, 8, 8, 448) 917504 ['mixed9[0][0]']

batch_normalization_89 (Ba (None, 8, 8, 448) 1344 ['conv2d_89[0][0]']

tchNormalization)

activation_89 (Activation) (None, 8, 8, 448) 0 ['batch_normalization_89[0][0]

']

conv2d_86 (Conv2D) (None, 8, 8, 384) 786432 ['mixed9[0][0]']

conv2d_90 (Conv2D) (None, 8, 8, 384) 1548288 ['activation_89[0][0]']

batch_normalization_86 (Ba (None, 8, 8, 384) 1152 ['conv2d_86[0][0]']

tchNormalization)

batch_normalization_90 (Ba (None, 8, 8, 384) 1152 ['conv2d_90[0][0]']

tchNormalization)

activation_86 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_86[0][0]

']

activation_90 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_90[0][0]

']

conv2d_87 (Conv2D) (None, 8, 8, 384) 442368 ['activation_86[0][0]']

conv2d_88 (Conv2D) (None, 8, 8, 384) 442368 ['activation_86[0][0]']

conv2d_91 (Conv2D) (None, 8, 8, 384) 442368 ['activation_90[0][0]']

conv2d_92 (Conv2D) (None, 8, 8, 384) 442368 ['activation_90[0][0]']

average_pooling2d_8 (Avera (None, 8, 8, 2048) 0 ['mixed9[0][0]']

gePooling2D)

conv2d_85 (Conv2D) (None, 8, 8, 320) 655360 ['mixed9[0][0]']

batch_normalization_87 (Ba (None, 8, 8, 384) 1152 ['conv2d_87[0][0]']

tchNormalization)

batch_normalization_88 (Ba (None, 8, 8, 384) 1152 ['conv2d_88[0][0]']

tchNormalization)

batch_normalization_91 (Ba (None, 8, 8, 384) 1152 ['conv2d_91[0][0]']

tchNormalization)

batch_normalization_92 (Ba (None, 8, 8, 384) 1152 ['conv2d_92[0][0]']

tchNormalization)

conv2d_93 (Conv2D) (None, 8, 8, 192) 393216 ['average_pooling2d_8[0][0]']

batch_normalization_85 (Ba (None, 8, 8, 320) 960 ['conv2d_85[0][0]']

tchNormalization)

activation_87 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_87[0][0]

']

activation_88 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_88[0][0]

']

activation_91 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_91[0][0]

']

activation_92 (Activation) (None, 8, 8, 384) 0 ['batch_normalization_92[0][0]

']

batch_normalization_93 (Ba (None, 8, 8, 192) 576 ['conv2d_93[0][0]']

tchNormalization)

activation_85 (Activation) (None, 8, 8, 320) 0 ['batch_normalization_85[0][0]

']

mixed9_1 (Concatenate) (None, 8, 8, 768) 0 ['activation_87[0][0]',

'activation_88[0][0]']

concatenate_1 (Concatenate (None, 8, 8, 768) 0 ['activation_91[0][0]',

) 'activation_92[0][0]']

activation_93 (Activation) (None, 8, 8, 192) 0 ['batch_normalization_93[0][0]

']

mixed10 (Concatenate) (None, 8, 8, 2048) 0 ['activation_85[0][0]',

'mixed9_1[0][0]',

'concatenate_1[0][0]',

'activation_93[0][0]']

avg_pool (GlobalAveragePoo (None, 2048) 0 ['mixed10[0][0]']

ling2D)

predictions (Dense) (None, 1000) 2049000 ['avg_pool[0][0]']

==================================================================================================

Total params: 23851784 (90.99 MB)

Trainable params: 23817352 (90.86 MB)

Non-trainable params: 34432 (134.50 KB)

__________________________________________________________________________________________________
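Some of the numbers in the summary above can be checked by hand. The sketch below assumes that the Conv2D layers have no bias term and that the first layers use 3x3 or 1x1 kernels with 'valid' padding; these kernel sizes and strides are not shown in the summary and are assumptions chosen because they reproduce the listed parameter counts and output shapes. Both helper names are hypothetical.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters, use_bias=False):
    """Number of weights in a Conv2D layer."""
    weights = kernel_h * kernel_w * in_channels * filters
    return weights + (filters if use_bias else 0)


def valid_output_size(size, kernel, stride=1):
    """Spatial output size of a convolution or pooling layer with 'valid' padding."""
    return (size - kernel) // stride + 1


# Kernel sizes and strides below are assumptions that match the summary.
print(conv2d_params(3, 3, 3, 32))           # 864  (conv2d)
print(conv2d_params(3, 3, 32, 32))          # 9216 (conv2d_1)
print(conv2d_params(1, 1, 64, 80))          # 5120 (conv2d_3)
print(valid_output_size(299, 3, stride=2))  # 149  (conv2d output height/width)
print(valid_output_size(149, 3))            # 147  (conv2d_1)
print(valid_output_size(147, 3, stride=2))  # 73   (max_pooling2d)
```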

As you can see, it’s a very deep network, and compared to VGG-19, it’s harder to decide which layers to extract features from.

- Notice that the Conv2D layers are named conv2d, conv2d_1, …, conv2d_93, for a total of 94 Conv2D layers.
  - So the second Conv2D layer is named conv2d_1.
- For the purpose of grading, please choose the following:
  - For the content layer: choose the Conv2D layer indexed at 88.
  - For the style layers: choose the first five Conv2D layers near the input end of the model.
    - Note the numbering as mentioned in these instructions.
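The naming pattern described above can be written down as a small helper. This is only a sketch, assuming the default layer names shown in the summary (which depend on a fresh Keras session, hence the K.clear_session() calls); conv2d_layer_name is a hypothetical function, not part of Keras or the assignment.

```python
def conv2d_layer_name(index):
    """Default name of the Conv2D layer at a zero-based position."""
    return "conv2d" if index == 0 else f"conv2d_{index}"


all_names = [conv2d_layer_name(i) for i in range(94)]
print(all_names[:5])   # ['conv2d', 'conv2d_1', 'conv2d_2', 'conv2d_3', 'conv2d_4']
print(all_names[-1])   # conv2d_93
```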

Choose intermediate layers from the network to represent the style and content of the image:

### START CODE HERE ###
# choose the content layer and put in a list
content_layers = [
    "conv2d_87",
]

# choose the five style layers of interest
style_layers = [
    "conv2d",
    "conv2d_1",
    "conv2d_2",
    "conv2d_3",
    "conv2d_4",
]

# combine the content and style layers into one list
content_and_style_layers = style_layers + content_layers
### END CODE HERE ###

# count the number of content layers and style layers.

# you will use these counts later in the assignment

NUM_CONTENT_LAYERS = len(content_layers)

NUM_STYLE_LAYERS = len(style_layers)

content_and_style_layers

['conv2d', 'conv2d_1', 'conv2d_2', 'conv2d_3', 'conv2d_4', 'conv2d_87']
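Because content_and_style_layers is built as style_layers + content_layers, the style entries come first. A Keras model created with a list of outputs returns them in that same order, so the two counts can later split the outputs back into style and content parts. The sketch below uses placeholder strings in place of feature tensors; split_outputs is a hypothetical helper and not part of the assignment.

```python
style_layers = ["conv2d", "conv2d_1", "conv2d_2", "conv2d_3", "conv2d_4"]
content_layers = ["conv2d_87"]
NUM_STYLE_LAYERS = len(style_layers)
NUM_CONTENT_LAYERS = len(content_layers)


def split_outputs(outputs):
    """Split a list ordered as style + content into its two parts."""
    style = outputs[:NUM_STYLE_LAYERS]
    content = outputs[NUM_STYLE_LAYERS:]
    return style, content


# Placeholder strings stand in for the feature tensors a real model would return.
fake_outputs = [f"features({name})" for name in style_layers + content_layers]
style_out, content_out = split_outputs(fake_outputs)
print(len(style_out), len(content_out))  # 5 1
print(content_out)                       # ['features(conv2d_87)']
```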

You can now set up your model to output the selected layers.

def inception_model(layer_names):

    """ Creates an inception model that returns a list of intermediate output values.

    args:

        layer_names: a list of strings, representing the names of the desired content and style layers

    returns:

        A model that takes the regular inception v3 input and outputs just the content and style layers.

    """

    ### START CODE HERE ###

    # Load InceptionV3 with the imagenet weights and **without** the fully-connected layer at the top of the network

    inception = tf.keras.applications.inception_v3.InceptionV3(

        include_top=False,

        weights="imagenet"

    )

    # Freeze the weights of the model's layers (make them not trainable)

    inception.trainable = False

    # Create a list of layer objects that are specified by layer_names

    output_layers = [inception.get_layer(name).output for name in layer_names]

    # Create the model that outputs the content and style layers

    model = tf.keras.Model(inputs=inception.input, outputs=output_layers)

    # return the model

    return model

    ### END CODE HERE ###

Create an instance of the content and style model using the function that you just defined.

K.clear_session()

### START CODE HERE ###

inception = inception_model(content_and_style_layers)

### END CODE HERE ###

inception.summary()

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, None, None, 3)] 0 []

conv2d (Conv2D) (None, None, None, 32) 864 ['input_1[0][0]']

batch_normalization (Batch (None, None, None, 32) 96 ['conv2d[0][0]']

Normalization)

activation (Activation) (None, None, None, 32) 0 ['batch_normalization[0][0]']

conv2d_1 (Conv2D) (None, None, None, 32) 9216 ['activation[0][0]']

batch_normalization_1 (Bat (None, None, None, 32) 96 ['conv2d_1[0][0]']

chNormalization)

activation_1 (Activation) (None, None, None, 32) 0 ['batch_normalization_1[0][0]'

]

conv2d_2 (Conv2D) (None, None, None, 64) 18432 ['activation_1[0][0]']

batch_normalization_2 (Bat (None, None, None, 64) 192 ['conv2d_2[0][0]']

chNormalization)

activation_2 (Activation) (None, None, None, 64) 0 ['batch_normalization_2[0][0]'

]

max_pooling2d (MaxPooling2 (None, None, None, 64) 0 ['activation_2[0][0]']

D)

conv2d_3 (Conv2D) (None, None, None, 80) 5120 ['max_pooling2d[0][0]']

batch_normalization_3 (Bat (None, None, None, 80) 240 ['conv2d_3[0][0]']

chNormalization)

activation_3 (Activation) (None, None, None, 80) 0 ['batch_normalization_3[0][0]'

]

conv2d_4 (Conv2D) (None, None, None, 192) 138240 ['activation_3[0][0]']

batch_normalization_4 (Bat (None, None, None, 192) 576 ['conv2d_4[0][0]']

chNormalization)

activation_4 (Activation) (None, None, None, 192) 0 ['batch_normalization_4[0][0]'

]

max_pooling2d_1 (MaxPoolin (None, None, None, 192) 0 ['activation_4[0][0]']

g2D)

conv2d_8 (Conv2D) (None, None, None, 64) 12288 ['max_pooling2d_1[0][0]']

batch_normalization_8 (Bat (None, None, None, 64) 192 ['conv2d_8[0][0]']

chNormalization)

activation_8 (Activation) (None, None, None, 64) 0 ['batch_normalization_8[0][0]'

]

conv2d_6 (Conv2D) (None, None, None, 48) 9216 ['max_pooling2d_1[0][0]']

conv2d_9 (Conv2D) (None, None, None, 96) 55296 ['activation_8[0][0]']

batch_normalization_6 (Bat (None, None, None, 48) 144 ['conv2d_6[0][0]']

chNormalization)

batch_normalization_9 (Bat (None, None, None, 96) 288 ['conv2d_9[0][0]']

chNormalization)

activation_6 (Activation) (None, None, None, 48) 0 ['batch_normalization_6[0][0]'

]

activation_9 (Activation) (None, None, None, 96) 0 ['batch_normalization_9[0][0]'

]

average_pooling2d (Average (None, None, None, 192) 0 ['max_pooling2d_1[0][0]']

Pooling2D)

conv2d_5 (Conv2D) (None, None, None, 64) 12288 ['max_pooling2d_1[0][0]']

conv2d_7 (Conv2D) (None, None, None, 64) 76800 ['activation_6[0][0]']

conv2d_10 (Conv2D) (None, None, None, 96) 82944 ['activation_9[0][0]']

conv2d_11 (Conv2D) (None, None, None, 32) 6144 ['average_pooling2d[0][0]']

batch_normalization_5 (Bat (None, None, None, 64) 192 ['conv2d_5[0][0]']

chNormalization)

batch_normalization_7 (Bat (None, None, None, 64) 192 ['conv2d_7[0][0]']

chNormalization)

batch_normalization_10 (Ba (None, None, None, 96) 288 ['conv2d_10[0][0]']

tchNormalization)

batch_normalization_11 (Ba (None, None, None, 32) 96 ['conv2d_11[0][0]']

tchNormalization)

activation_5 (Activation) (None, None, None, 64) 0 ['batch_normalization_5[0][0]'

]

activation_7 (Activation) (None, None, None, 64) 0 ['batch_normalization_7[0][0]'

]

activation_10 (Activation) (None, None, None, 96) 0 ['batch_normalization_10[0][0]

']

activation_11 (Activation) (None, None, None, 32) 0 ['batch_normalization_11[0][0]

']

mixed0 (Concatenate) (None, None, None, 256) 0 ['activation_5[0][0]',

'activation_7[0][0]',

'activation_10[0][0]',

'activation_11[0][0]']

conv2d_15 (Conv2D) (None, None, None, 64) 16384 ['mixed0[0][0]']

batch_normalization_15 (Ba (None, None, None, 64) 192 ['conv2d_15[0][0]']

tchNormalization)

activation_15 (Activation) (None, None, None, 64) 0 ['batch_normalization_15[0][0]

']

conv2d_13 (Conv2D) (None, None, None, 48) 12288 ['mixed0[0][0]']

conv2d_16 (Conv2D) (None, None, None, 96) 55296 ['activation_15[0][0]']

batch_normalization_13 (Ba (None, None, None, 48) 144 ['conv2d_13[0][0]']

tchNormalization)

batch_normalization_16 (Ba (None, None, None, 96) 288 ['conv2d_16[0][0]']

tchNormalization)

activation_13 (Activation) (None, None, None, 48) 0 ['batch_normalization_13[0][0]

']

activation_16 (Activation) (None, None, None, 96) 0 ['batch_normalization_16[0][0]

']

average_pooling2d_1 (Avera (None, None, None, 256) 0 ['mixed0[0][0]']

gePooling2D)

conv2d_12 (Conv2D) (None, None, None, 64) 16384 ['mixed0[0][0]']

conv2d_14 (Conv2D) (None, None, None, 64) 76800 ['activation_13[0][0]']

conv2d_17 (Conv2D) (None, None, None, 96) 82944 ['activation_16[0][0]']

conv2d_18 (Conv2D) (None, None, None, 64) 16384 ['average_pooling2d_1[0][0]']

batch_normalization_12 (Ba (None, None, None, 64) 192 ['conv2d_12[0][0]']

tchNormalization)

batch_normalization_14 (Ba (None, None, None, 64) 192 ['conv2d_14[0][0]']

tchNormalization)

batch_normalization_17 (Ba (None, None, None, 96) 288 ['conv2d_17[0][0]']

tchNormalization)

batch_normalization_18 (Ba (None, None, None, 64) 192 ['conv2d_18[0][0]']

tchNormalization)

activation_12 (Activation) (None, None, None, 64) 0 ['batch_normalization_12[0][0]

']

activation_14 (Activation) (None, None, None, 64) 0 ['batch_normalization_14[0][0]

']

activation_17 (Activation) (None, None, None, 96) 0 ['batch_normalization_17[0][0]

']

activation_18 (Activation) (None, None, None, 64) 0 ['batch_normalization_18[0][0]

']

mixed1 (Concatenate) (None, None, None, 288) 0 ['activation_12[0][0]',

'activation_14[0][0]',

'activation_17[0][0]',

'activation_18[0][0]']

conv2d_22 (Conv2D) (None, None, None, 64) 18432 ['mixed1[0][0]']

batch_normalization_22 (Ba (None, None, None, 64) 192 ['conv2d_22[0][0]']

tchNormalization)

activation_22 (Activation) (None, None, None, 64) 0 ['batch_normalization_22[0][0]

']

conv2d_20 (Conv2D) (None, None, None, 48) 13824 ['mixed1[0][0]']

conv2d_23 (Conv2D) (None, None, None, 96) 55296 ['activation_22[0][0]']

batch_normalization_20 (Ba (None, None, None, 48) 144 ['conv2d_20[0][0]']

tchNormalization)

batch_normalization_23 (Ba (None, None, None, 96) 288 ['conv2d_23[0][0]']

tchNormalization)

activation_20 (Activation) (None, None, None, 48) 0 ['batch_normalization_20[0][0]

']

activation_23 (Activation) (None, None, None, 96) 0 ['batch_normalization_23[0][0]

']

average_pooling2d_2 (Avera (None, None, None, 288) 0 ['mixed1[0][0]']

gePooling2D)

conv2d_19 (Conv2D) (None, None, None, 64) 18432 ['mixed1[0][0]']

conv2d_21 (Conv2D) (None, None, None, 64) 76800 ['activation_20[0][0]']

conv2d_24 (Conv2D) (None, None, None, 96) 82944 ['activation_23[0][0]']

conv2d_25 (Conv2D) (None, None, None, 64) 18432 ['average_pooling2d_2[0][0]']

batch_normalization_19 (Ba (None, None, None, 64) 192 ['conv2d_19[0][0]']

tchNormalization)

batch_normalization_21 (Ba (None, None, None, 64) 192 ['conv2d_21[0][0]']

tchNormalization)

batch_normalization_24 (Ba (None, None, None, 96) 288 ['conv2d_24[0][0]']

tchNormalization)

batch_normalization_25 (Ba (None, None, None, 64) 192 ['conv2d_25[0][0]']

tchNormalization)

activation_19 (Activation) (None, None, None, 64) 0 ['batch_normalization_19[0][0]

']

activation_21 (Activation) (None, None, None, 64) 0 ['batch_normalization_21[0][0]

']

activation_24 (Activation) (None, None, None, 96) 0 ['batch_normalization_24[0][0]

']

activation_25 (Activation) (None, None, None, 64) 0 ['batch_normalization_25[0][0]

']

mixed2 (Concatenate) (None, None, None, 288) 0 ['activation_19[0][0]',

'activation_21[0][0]',

'activation_24[0][0]',

'activation_25[0][0]']

conv2d_27 (Conv2D) (None, None, None, 64) 18432 ['mixed2[0][0]']

batch_normalization_27 (Ba (None, None, None, 64) 192 ['conv2d_27[0][0]']

tchNormalization)

activation_27 (Activation) (None, None, None, 64) 0 ['batch_normalization_27[0][0]

']

conv2d_28 (Conv2D) (None, None, None, 96) 55296 ['activation_27[0][0]']

batch_normalization_28 (Ba (None, None, None, 96) 288 ['conv2d_28[0][0]']

tchNormalization)

activation_28 (Activation) (None, None, None, 96) 0 ['batch_normalization_28[0][0]

']

conv2d_26 (Conv2D) (None, None, None, 384) 995328 ['mixed2[0][0]']

conv2d_29 (Conv2D) (None, None, None, 96) 82944 ['activation_28[0][0]']

batch_normalization_26 (Ba (None, None, None, 384) 1152 ['conv2d_26[0][0]']

tchNormalization)

batch_normalization_29 (Ba (None, None, None, 96) 288 ['conv2d_29[0][0]']

tchNormalization)

activation_26 (Activation) (None, None, None, 384) 0 ['batch_normalization_26[0][0]

']

activation_29 (Activation) (None, None, None, 96) 0 ['batch_normalization_29[0][0]

']

max_pooling2d_2 (MaxPoolin (None, None, None, 288) 0 ['mixed2[0][0]']

g2D)

mixed3 (Concatenate) (None, None, None, 768) 0 ['activation_26[0][0]',

'activation_29[0][0]',

'max_pooling2d_2[0][0]']

conv2d_34 (Conv2D) (None, None, None, 128) 98304 ['mixed3[0][0]']

batch_normalization_34 (Ba (None, None, None, 128) 384 ['conv2d_34[0][0]']

tchNormalization)

activation_34 (Activation) (None, None, None, 128) 0 ['batch_normalization_34[0][0]

']

conv2d_35 (Conv2D) (None, None, None, 128) 114688 ['activation_34[0][0]']

batch_normalization_35 (Ba (None, None, None, 128) 384 ['conv2d_35[0][0]']

tchNormalization)

activation_35 (Activation) (None, None, None, 128) 0 ['batch_normalization_35[0][0]

']

conv2d_31 (Conv2D) (None, None, None, 128) 98304 ['mixed3[0][0]']

conv2d_36 (Conv2D) (None, None, None, 128) 114688 ['activation_35[0][0]']

batch_normalization_31 (Ba (None, None, None, 128) 384 ['conv2d_31[0][0]']

tchNormalization)

batch_normalization_36 (Ba (None, None, None, 128) 384 ['conv2d_36[0][0]']

tchNormalization)

activation_31 (Activation) (None, None, None, 128) 0 ['batch_normalization_31[0][0]

']

activation_36 (Activation) (None, None, None, 128) 0 ['batch_normalization_36[0][0]

']

conv2d_32 (Conv2D) (None, None, None, 128) 114688 ['activation_31[0][0]']

conv2d_37 (Conv2D) (None, None, None, 128) 114688 ['activation_36[0][0]']

batch_normalization_32 (Ba (None, None, None, 128) 384 ['conv2d_32[0][0]']

tchNormalization)

batch_normalization_37 (Ba (None, None, None, 128) 384 ['conv2d_37[0][0]']

tchNormalization)

activation_32 (Activation) (None, None, None, 128) 0 ['batch_normalization_32[0][0]

']

activation_37 (Activation) (None, None, None, 128) 0 ['batch_normalization_37[0][0]

']

average_pooling2d_3 (Avera (None, None, None, 768) 0 ['mixed3[0][0]']

gePooling2D)

conv2d_30 (Conv2D) (None, None, None, 192) 147456 ['mixed3[0][0]']

conv2d_33 (Conv2D) (None, None, None, 192) 172032 ['activation_32[0][0]']

conv2d_38 (Conv2D) (None, None, None, 192) 172032 ['activation_37[0][0]']

conv2d_39 (Conv2D) (None, None, None, 192) 147456 ['average_pooling2d_3[0][0]']

batch_normalization_30 (Ba (None, None, None, 192) 576 ['conv2d_30[0][0]']

tchNormalization)

batch_normalization_33 (Ba (None, None, None, 192) 576 ['conv2d_33[0][0]']

tchNormalization)

batch_normalization_38 (Ba (None, None, None, 192) 576 ['conv2d_38[0][0]']

tchNormalization)

batch_normalization_39 (Ba (None, None, None, 192) 576 ['conv2d_39[0][0]']

tchNormalization)

activation_30 (Activation) (None, None, None, 192) 0 ['batch_normalization_30[0][0]

']

activation_33 (Activation) (None, None, None, 192) 0 ['batch_normalization_33[0][0]

']

activation_38 (Activation) (None, None, None, 192) 0 ['batch_normalization_38[0][0]

']

activation_39 (Activation) (None, None, None, 192) 0 ['batch_normalization_39[0][0]

']

mixed4 (Concatenate) (None, None, None, 768) 0 ['activation_30[0][0]',

'activation_33[0][0]',

'activation_38[0][0]',

'activation_39[0][0]']

conv2d_44 (Conv2D) (None, None, None, 160) 122880 ['mixed4[0][0]']

batch_normalization_44 (Ba (None, None, None, 160) 480 ['conv2d_44[0][0]']

tchNormalization)

activation_44 (Activation) (None, None, None, 160) 0 ['batch_normalization_44[0][0]

']

conv2d_45 (Conv2D) (None, None, None, 160) 179200 ['activation_44[0][0]']

batch_normalization_45 (Ba (None, None, None, 160) 480 ['conv2d_45[0][0]']

tchNormalization)

activation_45 (Activation) (None, None, None, 160) 0 ['batch_normalization_45[0][0]

']

conv2d_41 (Conv2D) (None, None, None, 160) 122880 ['mixed4[0][0]']

conv2d_46 (Conv2D) (None, None, None, 160) 179200 ['activation_45[0][0]']

batch_normalization_41 (Ba (None, None, None, 160) 480 ['conv2d_41[0][0]']

tchNormalization)

batch_normalization_46 (Ba (None, None, None, 160) 480 ['conv2d_46[0][0]']

tchNormalization)

activation_41 (Activation) (None, None, None, 160) 0 ['batch_normalization_41[0][0]

']

activation_46 (Activation) (None, None, None, 160) 0 ['batch_normalization_46[0][0]

']

conv2d_42 (Conv2D) (None, None, None, 160) 179200 ['activation_41[0][0]']

conv2d_47 (Conv2D) (None, None, None, 160) 179200 ['activation_46[0][0]']

batch_normalization_42 (Ba (None, None, None, 160) 480 ['conv2d_42[0][0]']

tchNormalization)

batch_normalization_47 (Ba (None, None, None, 160) 480 ['conv2d_47[0][0]']

tchNormalization)

activation_42 (Activation) (None, None, None, 160) 0 ['batch_normalization_42[0][0]

']

activation_47 (Activation) (None, None, None, 160) 0 ['batch_normalization_47[0][0]

']

average_pooling2d_4 (Avera (None, None, None, 768) 0 ['mixed4[0][0]']

gePooling2D)

conv2d_40 (Conv2D) (None, None, None, 192) 147456 ['mixed4[0][0]']

conv2d_43 (Conv2D) (None, None, None, 192) 215040 ['activation_42[0][0]']

conv2d_48 (Conv2D) (None, None, None, 192) 215040 ['activation_47[0][0]']

conv2d_49 (Conv2D) (None, None, None, 192) 147456 ['average_pooling2d_4[0][0]']

batch_normalization_40 (Ba (None, None, None, 192) 576 ['conv2d_40[0][0]']

tchNormalization)

batch_normalization_43 (Ba (None, None, None, 192) 576 ['conv2d_43[0][0]']

tchNormalization)

batch_normalization_48 (Ba (None, None, None, 192) 576 ['conv2d_48[0][0]']

tchNormalization)

batch_normalization_49 (Ba (None, None, None, 192) 576 ['conv2d_49[0][0]']

tchNormalization)

activation_40 (Activation) (None, None, None, 192) 0 ['batch_normalization_40[0][0]

']

activation_43 (Activation) (None, None, None, 192) 0 ['batch_normalization_43[0][0]

']

activation_48 (Activation) (None, None, None, 192) 0 ['batch_normalization_48[0][0]

']

activation_49 (Activation) (None, None, None, 192) 0 ['batch_normalization_49[0][0]

']

mixed5 (Concatenate) (None, None, None, 768) 0 ['activation_40[0][0]',

'activation_43[0][0]',

'activation_48[0][0]',

'activation_49[0][0]']

conv2d_54 (Conv2D) (None, None, None, 160) 122880 ['mixed5[0][0]']

batch_normalization_54 (Ba (None, None, None, 160) 480 ['conv2d_54[0][0]']

tchNormalization)

activation_54 (Activation) (None, None, None, 160) 0 ['batch_normalization_54[0][0]

']

conv2d_55 (Conv2D) (None, None, None, 160) 179200 ['activation_54[0][0]']

batch_normalization_55 (Ba (None, None, None, 160) 480 ['conv2d_55[0][0]']

tchNormalization)

activation_55 (Activation) (None, None, None, 160) 0 ['batch_normalization_55[0][0]

']

conv2d_51 (Conv2D) (None, None, None, 160) 122880 ['mixed5[0][0]']

conv2d_56 (Conv2D) (None, None, None, 160) 179200 ['activation_55[0][0]']

batch_normalization_51 (Ba (None, None, None, 160) 480 ['conv2d_51[0][0]']

tchNormalization)

batch_normalization_56 (Ba (None, None, None, 160) 480 ['conv2d_56[0][0]']

tchNormalization)

activation_51 (Activation) (None, None, None, 160) 0 ['batch_normalization_51[0][0]

']

activation_56 (Activation) (None, None, None, 160) 0 ['batch_normalization_56[0][0]

']

conv2d_52 (Conv2D) (None, None, None, 160) 179200 ['activation_51[0][0]']

conv2d_57 (Conv2D) (None, None, None, 160) 179200 ['activation_56[0][0]']

batch_normalization_52 (Ba (None, None, None, 160) 480 ['conv2d_52[0][0]']

tchNormalization)

batch_normalization_57 (Ba (None, None, None, 160) 480 ['conv2d_57[0][0]']

tchNormalization)

activation_52 (Activation) (None, None, None, 160) 0 ['batch_normalization_52[0][0]

']

activation_57 (Activation) (None, None, None, 160) 0 ['batch_normalization_57[0][0]

']

average_pooling2d_5 (Avera (None, None, None, 768) 0 ['mixed5[0][0]']

gePooling2D)

conv2d_50 (Conv2D) (None, None, None, 192) 147456 ['mixed5[0][0]']

conv2d_53 (Conv2D) (None, None, None, 192) 215040 ['activation_52[0][0]']

conv2d_58 (Conv2D) (None, None, None, 192) 215040 ['activation_57[0][0]']

conv2d_59 (Conv2D) (None, None, None, 192) 147456 ['average_pooling2d_5[0][0]']

batch_normalization_50 (Ba (None, None, None, 192) 576 ['conv2d_50[0][0]']

tchNormalization)

batch_normalization_53 (Ba (None, None, None, 192) 576 ['conv2d_53[0][0]']

tchNormalization)

batch_normalization_58 (Ba (None, None, None, 192) 576 ['conv2d_58[0][0]']

tchNormalization)

batch_normalization_59 (Ba (None, None, None, 192) 576 ['conv2d_59[0][0]']

tchNormalization)

activation_50 (Activation) (None, None, None, 192) 0 ['batch_normalization_50[0][0]

']

activation_53 (Activation) (None, None, None, 192) 0 ['batch_normalization_53[0][0]

']

activation_58 (Activation) (None, None, None, 192) 0 ['batch_normalization_58[0][0]

']

activation_59 (Activation) (None, None, None, 192) 0 ['batch_normalization_59[0][0]

']

mixed6 (Concatenate) (None, None, None, 768) 0 ['activation_50[0][0]',

'activation_53[0][0]',

'activation_58[0][0]',

'activation_59[0][0]']

conv2d_64 (Conv2D) (None, None, None, 192) 147456 ['mixed6[0][0]']

batch_normalization_64 (Ba (None, None, None, 192) 576 ['conv2d_64[0][0]']

tchNormalization)

activation_64 (Activation) (None, None, None, 192) 0 ['batch_normalization_64[0][0]

']

conv2d_65 (Conv2D) (None, None, None, 192) 258048 ['activation_64[0][0]']

batch_normalization_65 (Ba (None, None, None, 192) 576 ['conv2d_65[0][0]']

tchNormalization)

activation_65 (Activation) (None, None, None, 192) 0 ['batch_normalization_65[0][0]

']

conv2d_61 (Conv2D) (None, None, None, 192) 147456 ['mixed6[0][0]']

conv2d_66 (Conv2D) (None, None, None, 192) 258048 ['activation_65[0][0]']

batch_normalization_61 (Ba (None, None, None, 192) 576 ['conv2d_61[0][0]']

tchNormalization)

batch_normalization_66 (Ba (None, None, None, 192) 576 ['conv2d_66[0][0]']

tchNormalization)

activation_61 (Activation) (None, None, None, 192) 0 ['batch_normalization_61[0][0]

']

activation_66 (Activation) (None, None, None, 192) 0 ['batch_normalization_66[0][0]

']

conv2d_62 (Conv2D) (None, None, None, 192) 258048 ['activation_61[0][0]']

conv2d_67 (Conv2D) (None, None, None, 192) 258048 ['activation_66[0][0]']

batch_normalization_62 (Ba (None, None, None, 192) 576 ['conv2d_62[0][0]']

tchNormalization)

batch_normalization_67 (Ba (None, None, None, 192) 576 ['conv2d_67[0][0]']

tchNormalization)

activation_62 (Activation) (None, None, None, 192) 0 ['batch_normalization_62[0][0]

']

activation_67 (Activation) (None, None, None, 192) 0 ['batch_normalization_67[0][0]

']

average_pooling2d_6 (Avera (None, None, None, 768) 0 ['mixed6[0][0]']

gePooling2D)

conv2d_60 (Conv2D) (None, None, None, 192) 147456 ['mixed6[0][0]']

conv2d_63 (Conv2D) (None, None, None, 192) 258048 ['activation_62[0][0]']

conv2d_68 (Conv2D) (None, None, None, 192) 258048 ['activation_67[0][0]']

conv2d_69 (Conv2D) (None, None, None, 192) 147456 ['average_pooling2d_6[0][0]']

batch_normalization_60 (Ba (None, None, None, 192) 576 ['conv2d_60[0][0]']

tchNormalization)

batch_normalization_63 (Ba (None, None, None, 192) 576 ['conv2d_63[0][0]']

tchNormalization)

batch_normalization_68 (Ba (None, None, None, 192) 576 ['conv2d_68[0][0]']

tchNormalization)

batch_normalization_69 (Ba (None, None, None, 192) 576 ['conv2d_69[0][0]']

tchNormalization)

activation_60 (Activation) (None, None, None, 192) 0 ['batch_normalization_60[0][0]

']

activation_63 (Activation) (None, None, None, 192) 0 ['batch_normalization_63[0][0]

']

activation_68 (Activation) (None, None, None, 192) 0 ['batch_normalization_68[0][0]

']

activation_69 (Activation) (None, None, None, 192) 0 ['batch_normalization_69[0][0]

']

mixed7 (Concatenate) (None, None, None, 768) 0 ['activation_60[0][0]',

'activation_63[0][0]',

'activation_68[0][0]',

'activation_69[0][0]']

conv2d_72 (Conv2D) (None, None, None, 192) 147456 ['mixed7[0][0]']

batch_normalization_72 (Ba (None, None, None, 192) 576 ['conv2d_72[0][0]']

tchNormalization)

activation_72 (Activation) (None, None, None, 192) 0 ['batch_normalization_72[0][0]

']

conv2d_73 (Conv2D) (None, None, None, 192) 258048 ['activation_72[0][0]']

batch_normalization_73 (Ba (None, None, None, 192) 576 ['conv2d_73[0][0]']

tchNormalization)

activation_73 (Activation) (None, None, None, 192) 0 ['batch_normalization_73[0][0]

']

conv2d_70 (Conv2D) (None, None, None, 192) 147456 ['mixed7[0][0]']

conv2d_74 (Conv2D) (None, None, None, 192) 258048 ['activation_73[0][0]']

batch_normalization_70 (Ba (None, None, None, 192) 576 ['conv2d_70[0][0]']

tchNormalization)

batch_normalization_74 (Ba (None, None, None, 192) 576 ['conv2d_74[0][0]']

tchNormalization)

activation_70 (Activation) (None, None, None, 192) 0 ['batch_normalization_70[0][0]

']

activation_74 (Activation) (None, None, None, 192) 0 ['batch_normalization_74[0][0]

']

conv2d_71 (Conv2D) (None, None, None, 320) 552960 ['activation_70[0][0]']

conv2d_75 (Conv2D) (None, None, None, 192) 331776 ['activation_74[0][0]']

batch_normalization_71 (Ba (None, None, None, 320) 960 ['conv2d_71[0][0]']

tchNormalization)

batch_normalization_75 (Ba (None, None, None, 192) 576 ['conv2d_75[0][0]']

tchNormalization)

activation_71 (Activation) (None, None, None, 320) 0 ['batch_normalization_71[0][0]

']

activation_75 (Activation) (None, None, None, 192) 0 ['batch_normalization_75[0][0]

']

max_pooling2d_3 (MaxPoolin (None, None, None, 768) 0 ['mixed7[0][0]']

g2D)

mixed8 (Concatenate) (None, None, None, 1280) 0 ['activation_71[0][0]',

'activation_75[0][0]',

'max_pooling2d_3[0][0]']

conv2d_80 (Conv2D) (None, None, None, 448) 573440 ['mixed8[0][0]']

batch_normalization_80 (Ba (None, None, None, 448) 1344 ['conv2d_80[0][0]']

tchNormalization)

activation_80 (Activation) (None, None, None, 448) 0 ['batch_normalization_80[0][0]

']

conv2d_77 (Conv2D) (None, None, None, 384) 491520 ['mixed8[0][0]']

conv2d_81 (Conv2D) (None, None, None, 384) 1548288 ['activation_80[0][0]']

batch_normalization_77 (Ba (None, None, None, 384) 1152 ['conv2d_77[0][0]']

tchNormalization)

batch_normalization_81 (Ba (None, None, None, 384) 1152 ['conv2d_81[0][0]']

tchNormalization)

activation_77 (Activation) (None, None, None, 384) 0 ['batch_normalization_77[0][0]

']

activation_81 (Activation) (None, None, None, 384) 0 ['batch_normalization_81[0][0]

']

conv2d_78 (Conv2D) (None, None, None, 384) 442368 ['activation_77[0][0]']

conv2d_79 (Conv2D) (None, None, None, 384) 442368 ['activation_77[0][0]']

conv2d_82 (Conv2D) (None, None, None, 384) 442368 ['activation_81[0][0]']

conv2d_83 (Conv2D) (None, None, None, 384) 442368 ['activation_81[0][0]']

average_pooling2d_7 (Avera (None, None, None, 1280) 0 ['mixed8[0][0]']

gePooling2D)

conv2d_76 (Conv2D) (None, None, None, 320) 409600 ['mixed8[0][0]']

batch_normalization_78 (Ba (None, None, None, 384) 1152 ['conv2d_78[0][0]']

tchNormalization)

batch_normalization_79 (Ba (None, None, None, 384) 1152 ['conv2d_79[0][0]']

tchNormalization)

batch_normalization_82 (Ba (None, None, None, 384) 1152 ['conv2d_82[0][0]']

tchNormalization)

batch_normalization_83 (Ba (None, None, None, 384) 1152 ['conv2d_83[0][0]']

tchNormalization)

conv2d_84 (Conv2D) (None, None, None, 192) 245760 ['average_pooling2d_7[0][0]']

batch_normalization_76 (Ba (None, None, None, 320) 960 ['conv2d_76[0][0]']

tchNormalization)

activation_78 (Activation) (None, None, None, 384) 0 ['batch_normalization_78[0][0]

']

activation_79 (Activation) (None, None, None, 384) 0 ['batch_normalization_79[0][0]

']

activation_82 (Activation) (None, None, None, 384) 0 ['batch_normalization_82[0][0]

']

activation_83 (Activation) (None, None, None, 384) 0 ['batch_normalization_83[0][0]

']

batch_normalization_84 (Ba (None, None, None, 192) 576 ['conv2d_84[0][0]']

tchNormalization)

activation_76 (Activation) (None, None, None, 320) 0 ['batch_normalization_76[0][0]

']

mixed9_0 (Concatenate) (None, None, None, 768) 0 ['activation_78[0][0]',

'activation_79[0][0]']

concatenate (Concatenate) (None, None, None, 768) 0 ['activation_82[0][0]',

'activation_83[0][0]']

activation_84 (Activation) (None, None, None, 192) 0 ['batch_normalization_84[0][0]

']

mixed9 (Concatenate) (None, None, None, 2048) 0 ['activation_76[0][0]',

'mixed9_0[0][0]',

'concatenate[0][0]',

'activation_84[0][0]']

conv2d_86 (Conv2D) (None, None, None, 384) 786432 ['mixed9[0][0]']

batch_normalization_86 (Ba (None, None, None, 384) 1152 ['conv2d_86[0][0]']

tchNormalization)

activation_86 (Activation) (None, None, None, 384) 0 ['batch_normalization_86[0][0]

']

conv2d_87 (Conv2D) (None, None, None, 384) 442368 ['activation_86[0][0]']

==================================================================================================

Total params: 16952672 (64.67 MB)

Trainable params: 0 (0.00 Byte)

Non-trainable params: 16952672 (64.67 MB)

__________________________________________________________________________________________________
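The parameter counts printed in the summary can be checked by hand. The sketch below assumes details that the summary itself does not show: that conv2d uses a 3x3 kernel and conv2d_3 a 1x1 kernel, that the convolutions have no bias term, that each batch normalization layer stores three values per channel, and that parameters are stored as 4-byte float32 values. The helper conv_params is hypothetical.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights in a bias-free 2D convolution (assumption: no bias term)."""
    return kernel_h * kernel_w * in_channels * out_channels

# conv2d: assumed 3x3 kernel, 3 input channels (RGB), 32 filters
conv2d_count = conv_params(3, 3, 3, 32)
print(conv2d_count)    # 864, as listed in the summary

# conv2d_3: assumed 1x1 kernel, 64 input channels, 80 filters
conv2d_3_count = conv_params(1, 1, 64, 80)
print(conv2d_3_count)  # 5120, as listed in the summary

# batch_normalization: assumed 3 stored values per channel, 32 channels
bn_count = 3 * 32
print(bn_count)        # 96, as listed in the summary

# Total size, assuming 4 bytes per parameter
total_params = 16952672
size_mb = total_params * 4 / 1024 ** 2
print(round(size_mb, 2))  # 64.67
```

Since the model is frozen, all 16952672 parameters show up under non-trainable params.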

Calculate style loss

The style loss is the average of the squared differences between the features and targets.

def get_style_loss(features, targets):

    """Expects two images of dimension h, w, c

    Args:

        features: tensor with shape: (height, width, channels)

        targets: tensor with shape: (height, width, channels)

    Returns:

        style loss (scalar)

    """

    ### START CODE HERE ###

    # Calculate the style loss

    style_loss = tf.reduce_mean(tf.square(features - targets))

    ### END CODE HERE ###

    return style_loss
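As a quick sanity check of the definition, here is a minimal NumPy sketch of the same calculation. NumPy stands in for tf.reduce_mean and tf.square here. The helper name style_loss_np and the toy values are illustrative assumptions, not part of the assignment.

```python
import numpy as np

def style_loss_np(features, targets):
    """Mean of the element-wise squared differences (hypothetical NumPy stand-in)."""
    return np.mean(np.square(features - targets))

# Toy tensors with shape (height, width, channels) = (2, 1, 2), for illustration only
features = np.array([[[1.0, 2.0]], [[3.0, 4.0]]])
targets = np.zeros_like(features)

style_loss = style_loss_np(features, targets)
print(style_loss)  # (1 + 4 + 9 + 16) / 4 = 7.5
```

Because the result is an average, it does not grow with the number of elements in the tensors.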

Calculate content loss

Calculate the sum of the squared error between the features and targets, then multiply by a scaling factor (0.5).

def get_content_loss(features, targets):

    """Expects two images of dimension h, w, c

    Args:

        features: tensor with shape: (height, width, channels)

        targets: tensor with shape: (height, width, channels)

    Returns:

        content loss (scalar)

    """

    # get the sum of the squared error multiplied by a scaling factor

    content_loss = 0.5 * tf.reduce_sum(tf.square(features - targets))

    return content_loss
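The same kind of check works for the content loss. Again, this is a NumPy stand-in for tf.reduce_sum and tf.square. The helper name content_loss_np and the toy values are assumptions made for illustration.

```python
import numpy as np

def content_loss_np(features, targets):
    """0.5 times the sum of squared differences (hypothetical NumPy stand-in)."""
    return 0.5 * np.sum(np.square(features - targets))

# Toy tensors with shape (height, width, channels) = (2, 1, 2), for illustration only
features = np.array([[[1.0, 2.0]], [[3.0, 4.0]]])
targets = np.zeros_like(features)

content_loss = content_loss_np(features, targets)
print(content_loss)  # 0.5 * (1 + 4 + 9 + 16) = 15.0

# Unlike a mean, a sum grows with the tensor size:
# stacking the same values twice doubles the loss.
bigger = np.concatenate([features, features], axis=0)
bigger_loss = content_loss_np(bigger, np.zeros_like(bigger))
print(bigger_loss)   # 30.0
```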

Calculate the gram matrix

Use tf.linalg.einsum to calculate the gram matrix for an input tensor.

- In addition, calculate the scaling factor num_locations and divide the gram matrix calculation by num_locations.

$$ \text{num locations} = height \times width $$

def gram_matrix(input_tensor):

    """ Calculates the gram matrix and divides by the number of locations

    Args:

        input_tensor: tensor of shape (batch, height, width, channels)

    Returns:

        scaled_gram: gram matrix divided by the number of locations

    """

    # calculate the gram matrix of the input tensor

    gram = tf.linalg.einsum('bijc,bijd->bcd', input_tensor, input_tensor)

    # get the height and width of the input tensor

    input_shape = tf.shape(input_tensor)

    height = input_shape[1]

    width = input_shape[2]

    # get the number of locations (height times width), and cast it as a tf.float32

    num_locations = tf.cast(height * width, tf.float32)

    # scale the gram matrix by dividing by the number of locations

    scaled_gram = gram / num_locations

    return scaled_gram
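To see what the einsum pattern 'bijc,bijd->bcd' computes, the sketch below repeats the calculation with np.einsum (a NumPy stand-in for tf.linalg.einsum) and compares it with an explicit matrix product over the flattened spatial locations. The helper name gram_matrix_np and the random toy input are assumptions made for illustration.

```python
import numpy as np

def gram_matrix_np(input_tensor):
    """Gram matrix over spatial positions, divided by height * width (NumPy stand-in)."""
    # Sum over the spatial indices i and j, keeping every channel pair (c, d)
    gram = np.einsum('bijc,bijd->bcd', input_tensor, input_tensor)
    _, height, width, _ = input_tensor.shape
    num_locations = float(height * width)
    return gram / num_locations

# Toy input with shape (batch, height, width, channels) = (1, 2, 2, 3)
rng = np.random.default_rng(0)
x = rng.normal(size=(1, 2, 2, 3))
scaled = gram_matrix_np(x)

# Reference: flatten the 2 x 2 grid into 4 locations, then compute F^T F / 4
flat = x.reshape(1, 4, 3)
reference = np.matmul(flat.transpose(0, 2, 1), flat) / 4.0

print(scaled.shape)                    # (1, 3, 3): one channels-by-channels matrix per image
print(np.allclose(scaled, reference))  # True
```

The result has shape (batch, channels, channels) whatever the image size, which is why gram matrices of different-sized images can be compared directly.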

Get the style image features

Given the style image as input, you’ll get the style features of the inception model that you just created using inception_model().

- You’ll first preprocess the image using the given preprocess_image function.

- You’ll then get the outputs of the model.

- From the outputs, just get the style feature layers and not the content feature layer.
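The last step relies on the order of the model outputs. Since content_and_style_layers was built as style_layers + content_layers, the style outputs come first. The sketch below shows the slicing with placeholder strings standing in for the real feature tensors; the names outputs, style_outputs and content_outputs are illustrative assumptions.

```python
style_layers = ["conv2d", "conv2d_1", "conv2d_2", "conv2d_3", "conv2d_4"]
content_layers = ["conv2d_87"]

# Same ordering as in the assignment: style layers first, then the content layer
content_and_style_layers = style_layers + content_layers
NUM_STYLE_LAYERS = len(style_layers)

# Placeholder for the model outputs: one entry per requested layer, in the same order
outputs = [f"features_of_{name}" for name in content_and_style_layers]

# Keep only the style entries; the remaining entry belongs to the content layer
style_outputs = outputs[:NUM_STYLE_LAYERS]
content_outputs = outputs[NUM_STYLE_LAYERS:]

print(style_outputs)
print(content_outputs)
```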

You can run the following code to check the order of the layers in your inception model:

tmp_layer_list = [layer.output for layer in inception.layers]

tmp_layer_list

[<KerasTensor: shape=(None, None, None, 3) dtype=float32 (created by layer 'input_1')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'conv2d')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'batch_normalization')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'activation')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'conv2d_1')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'batch_normalization_1')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'activation_1')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_2')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_2')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_2')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'max_pooling2d')>,

<KerasTensor: shape=(None, None, None, 80) dtype=float32 (created by layer 'conv2d_3')>,

<KerasTensor: shape=(None, None, None, 80) dtype=float32 (created by layer 'batch_normalization_3')>,

<KerasTensor: shape=(None, None, None, 80) dtype=float32 (created by layer 'activation_3')>,

<KerasTensor: shape=(None, None, None, 192) dtype=float32 (created by layer 'conv2d_4')>,

<KerasTensor: shape=(None, None, None, 192) dtype=float32 (created by layer 'batch_normalization_4')>,

<KerasTensor: shape=(None, None, None, 192) dtype=float32 (created by layer 'activation_4')>,

<KerasTensor: shape=(None, None, None, 192) dtype=float32 (created by layer 'max_pooling2d_1')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_8')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_8')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_8')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'conv2d_6')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'conv2d_9')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'batch_normalization_6')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'batch_normalization_9')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'activation_6')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'activation_9')>,

<KerasTensor: shape=(None, None, None, 192) dtype=float32 (created by layer 'average_pooling2d')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_5')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_7')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'conv2d_10')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'conv2d_11')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_5')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_7')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'batch_normalization_10')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'batch_normalization_11')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_5')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_7')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'activation_10')>,

<KerasTensor: shape=(None, None, None, 32) dtype=float32 (created by layer 'activation_11')>,

<KerasTensor: shape=(None, None, None, 256) dtype=float32 (created by layer 'mixed0')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_15')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_15')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_15')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'conv2d_13')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'conv2d_16')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'batch_normalization_13')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'batch_normalization_16')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'activation_13')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'activation_16')>,

<KerasTensor: shape=(None, None, None, 256) dtype=float32 (created by layer 'average_pooling2d_1')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_12')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_14')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'conv2d_17')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_18')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_12')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_14')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'batch_normalization_17')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_18')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_12')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_14')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'activation_17')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_18')>,

<KerasTensor: shape=(None, None, None, 288) dtype=float32 (created by layer 'mixed1')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_22')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_22')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_22')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'conv2d_20')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'conv2d_23')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'batch_normalization_20')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'batch_normalization_23')>,

<KerasTensor: shape=(None, None, None, 48) dtype=float32 (created by layer 'activation_20')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'activation_23')>,

<KerasTensor: shape=(None, None, None, 288) dtype=float32 (created by layer 'average_pooling2d_2')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_19')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_21')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'conv2d_24')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_25')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_19')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_21')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'batch_normalization_24')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_25')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_19')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_21')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'activation_24')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_25')>,

<KerasTensor: shape=(None, None, None, 288) dtype=float32 (created by layer 'mixed2')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'conv2d_27')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'batch_normalization_27')>,

<KerasTensor: shape=(None, None, None, 64) dtype=float32 (created by layer 'activation_27')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'conv2d_28')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'batch_normalization_28')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'activation_28')>,

<KerasTensor: shape=(None, None, None, 384) dtype=float32 (created by layer 'conv2d_26')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'conv2d_29')>,

<KerasTensor: shape=(None, None, None, 384) dtype=float32 (created by layer 'batch_normalization_26')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'batch_normalization_29')>,

<KerasTensor: shape=(None, None, None, 384) dtype=float32 (created by layer 'activation_26')>,

<KerasTensor: shape=(None, None, None, 96) dtype=float32 (created by layer 'activation_29')>,

<KerasTensor: shape=(None, None, None, 288) dtype=float32 (created by layer 'max_pooling2d_2')>,

<KerasTensor: shape=(None, None, None, 768) dtype=float32 (created by layer 'mixed3')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'conv2d_34')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'batch_normalization_34')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'activation_34')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'conv2d_35')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'batch_normalization_35')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'activation_35')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'conv2d_31')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'conv2d_36')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'batch_normalization_31')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'batch_normalization_36')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'activation_31')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'activation_36')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'conv2d_32')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'conv2d_37')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'batch_normalization_32')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'batch_normalization_37')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'activation_32')>,

<KerasTensor: shape=(None, None, None, 128) dtype=float32 (created by layer 'activation_37')>,