Ungraded Lab: MNIST Deep Autoencoder

Welcome back! In this lab, you will extend the shallow autoencoder you built in the previous exercise. The model here uses a deeper network, with three Dense layers each in the encoder and the decoder, so it can handle more complex images.

Imports

try:
    # %tensorflow_version only exists in Colab.
    %tensorflow_version 2.x
except Exception:
    pass

import tensorflow as tf
import tensorflow_datasets as tfds

import numpy as np
import matplotlib.pyplot as plt

Colab only includes TensorFlow 2.x; %tensorflow_version has no effect.

Prepare the Dataset

You will prepare the MNIST dataset just like in the previous lab.

def map_image(image, label):
    '''Normalizes and flattens the image. Returns image as input and label.'''
    image = tf.cast(image, dtype=tf.float32)
    image = image / 255.0
    image = tf.reshape(image, shape=(784,))

    return image, image

# Load the train and test sets from TFDS
BATCH_SIZE = 128
SHUFFLE_BUFFER_SIZE = 1024

train_dataset = tfds.load('mnist', as_supervised=True, split="train")
train_dataset = train_dataset.map(map_image)
train_dataset = train_dataset.shuffle(SHUFFLE_BUFFER_SIZE).batch(BATCH_SIZE).repeat()

test_dataset = tfds.load('mnist', as_supervised=True, split="test")
test_dataset = test_dataset.map(map_image)
test_dataset = test_dataset.batch(BATCH_SIZE).repeat()

Downloading and preparing dataset 11.06 MiB (download: 11.06 MiB, generated: 21.00 MiB, total: 32.06 MiB) to /root/tensorflow_datasets/mnist/3.0.1...

Dataset mnist downloaded and prepared to /root/tensorflow_datasets/mnist/3.0.1. Subsequent calls will reuse this data.
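To make the preprocessing step concrete, here is a minimal NumPy sketch of what `map_image` does to a single sample. The helper `map_image_np` and the random `fake_image` are hypothetical stand-ins introduced only for illustration; the lab itself runs the TensorFlow version inside `Dataset.map`.

```python
import numpy as np

def map_image_np(image):
    """NumPy stand-in for map_image: scale to [0, 1] and flatten to 784 values."""
    image = image.astype(np.float32) / 255.0
    image = image.reshape(784)
    # An autoencoder reconstructs its input, so the image doubles as the label.
    return image, image

# Hypothetical stand-in for one MNIST sample: 28x28x1 uint8 pixels.
rng = np.random.default_rng(0)
fake_image = rng.integers(0, 256, size=(28, 28, 1), dtype=np.uint8)

model_input, target = map_image_np(fake_image)
print(model_input.shape, model_input.dtype)
print(float(model_input.min()), float(model_input.max()))
```

Note that the original digit label is discarded: both elements of the returned pair are the same flattened image.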

Build the Model

As mentioned, you will have a deeper network for the autoencoder. Compare the layers here with those of the shallow network you built in the previous lab.

def deep_autoencoder():
    '''Builds the encoder and decoder using Dense layers.'''
    # Note: this function reads the module-level `inputs` tensor defined below,
    # so `inputs` must exist before the function is called.
    encoder = tf.keras.layers.Dense(units=128, activation='relu')(inputs)
    encoder = tf.keras.layers.Dense(units=64, activation='relu')(encoder)
    encoder = tf.keras.layers.Dense(units=32, activation='relu')(encoder)

    decoder = tf.keras.layers.Dense(units=64, activation='relu')(encoder)
    decoder = tf.keras.layers.Dense(units=128, activation='relu')(decoder)
    decoder = tf.keras.layers.Dense(units=784, activation='sigmoid')(decoder)

    return encoder, decoder

# set the input tensor
inputs = tf.keras.layers.Input(shape=(784,))

# get the encoder and decoder output
deep_encoder_output, deep_autoencoder_output = deep_autoencoder()

# setup the encoder because you will visualize its output later
deep_encoder_model = tf.keras.Model(inputs=inputs, outputs=deep_encoder_output)

# setup the autoencoder
deep_autoencoder_model = tf.keras.Model(inputs=inputs, outputs=deep_autoencoder_output)
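One way to compare this architecture with another is to count trainable parameters. The sketch below does that arithmetic in plain Python for the layer widths used in `deep_autoencoder()`. The helper `dense_param_count` is a hypothetical name introduced here, and the count assumes each Dense layer has a bias vector, which is the Keras default.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases for a stack of fully connected layers."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus bias vector
    return total

# Layer widths read off deep_autoencoder(): input, three encoder layers,
# three decoder layers.
deep_sizes = [784, 128, 64, 32, 64, 128, 784]

deep_params = dense_param_count(deep_sizes)
bottleneck = min(deep_sizes)

print(deep_params)        # 222384
print(784 / bottleneck)   # 24.5
```

The same helper can be applied to the layer widths of the shallow network from the previous lab. The second number is the compression factor at the bottleneck: 784 pixel values are squeezed into a 32-value code.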

Compile and Train the Model

train_steps = 60000 // BATCH_SIZE

deep_autoencoder_model.compile(optimizer=tf.keras.optimizers.Adam(), loss='binary_crossentropy')

deep_auto_history = deep_autoencoder_model.fit(train_dataset, steps_per_epoch=train_steps, epochs=50)

Epoch 1/50

468/468 [==============================] - 22s 23ms/step - loss: 0.1973

Epoch 2/50

468/468 [==============================] - 6s 14ms/step - loss: 0.1278

Epoch 3/50

468/468 [==============================] - 6s 12ms/step - loss: 0.1168

Epoch 4/50

468/468 [==============================] - 6s 12ms/step - loss: 0.1100

Epoch 5/50

468/468 [==============================] - 4s 9ms/step - loss: 0.1059

Epoch 6/50

468/468 [==============================] - 6s 12ms/step - loss: 0.1027

Epoch 7/50

468/468 [==============================] - 4s 9ms/step - loss: 0.1002

Epoch 8/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0982

Epoch 9/50

468/468 [==============================] - 5s 10ms/step - loss: 0.0964

Epoch 10/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0949

Epoch 11/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0937

Epoch 12/50

468/468 [==============================] - 6s 12ms/step - loss: 0.0928

Epoch 13/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0918

Epoch 14/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0911

Epoch 15/50

468/468 [==============================] - 5s 10ms/step - loss: 0.0904

Epoch 16/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0898

Epoch 17/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0891

Epoch 18/50

468/468 [==============================] - 4s 10ms/step - loss: 0.0885

Epoch 19/50

468/468 [==============================] - 4s 9ms/step - loss: 0.0879

Epoch 20/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0874

Epoch 21/50

468/468 [==============================] - 4s 9ms/step - loss: 0.0869

Epoch 22/50

468/468 [==============================] - 4s 9ms/step - loss: 0.0866

Epoch 23/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0862

Epoch 24/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0860

Epoch 25/50

468/468 [==============================] - 5s 11ms/step - loss: 0.0856

Epoch 26/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0855

Epoch 27/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0853

Epoch 28/50

468/468 [==============================] - 5s 10ms/step - loss: 0.0850

Epoch 29/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0848

Epoch 30/50

468/468 [==============================] - 3s 7ms/step - loss: 0.0847

Epoch 31/50

468/468 [==============================] - 5s 11ms/step - loss: 0.0846

Epoch 32/50

468/468 [==============================] - 4s 7ms/step - loss: 0.0844

Epoch 33/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0843

Epoch 34/50

468/468 [==============================] - 4s 9ms/step - loss: 0.0842

Epoch 35/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0841

Epoch 36/50

468/468 [==============================] - 4s 9ms/step - loss: 0.0840

Epoch 37/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0838

Epoch 38/50

468/468 [==============================] - 5s 10ms/step - loss: 0.0837

Epoch 39/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0837

Epoch 40/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0836

Epoch 41/50

468/468 [==============================] - 5s 10ms/step - loss: 0.0835

Epoch 42/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0834

Epoch 43/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0833

Epoch 44/50

468/468 [==============================] - 5s 10ms/step - loss: 0.0833

Epoch 45/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0832

Epoch 46/50

468/468 [==============================] - 5s 10ms/step - loss: 0.0831

Epoch 47/50

468/468 [==============================] - 4s 9ms/step - loss: 0.0830

Epoch 48/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0829

Epoch 49/50

468/468 [==============================] - 4s 8ms/step - loss: 0.0829

Epoch 50/50

468/468 [==============================] - 4s 9ms/step - loss: 0.0828
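The `468/468` shown on every epoch line comes from the floor division used for `train_steps`. A short sketch of that arithmetic, using only the numbers that appear in the lab code:

```python
NUM_TRAIN_IMAGES = 60000  # the constant used in the lab's train_steps expression
BATCH_SIZE = 128

train_steps = NUM_TRAIN_IMAGES // BATCH_SIZE      # full batches per epoch
images_per_epoch = train_steps * BATCH_SIZE       # images covered by those steps
leftover = NUM_TRAIN_IMAGES - images_per_epoch    # images not covered by the full batches

print(train_steps, images_per_epoch, leftover)    # 468 59904 96
```

Because the training dataset ends with `.repeat()`, those 96 remaining images are not lost; they are simply consumed at the start of the following epoch, so Keras epoch boundaries do not line up exactly with passes over the data.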

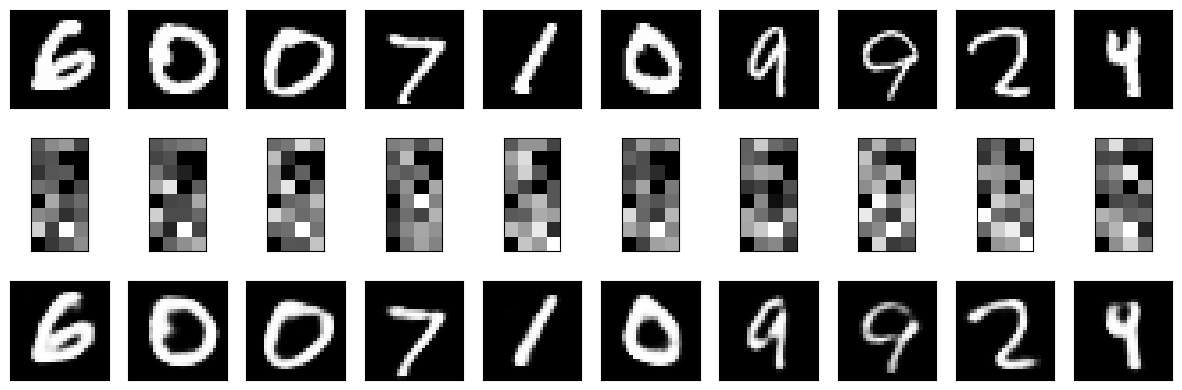
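The loss in the log flattens out near 0.083 instead of approaching zero. One likely contributor is that the targets are grayscale values in [0, 1] rather than strict 0/1 labels, and binary cross-entropy has a nonzero minimum for such targets. The sketch below illustrates this for a single pixel in plain Python; the function and its `eps` clipping constant are written for illustration and are not taken from the Keras source.

```python
import math

def binary_crossentropy(target, pred, eps=1e-7):
    """Per-pixel binary cross-entropy with the prediction clipped away from 0 and 1."""
    pred = min(max(pred, eps), 1.0 - eps)
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))

# Hard target, perfect prediction: the loss is essentially zero.
hard = binary_crossentropy(1.0, 1.0)

# Soft target (a mid-gray pixel): even the best possible prediction,
# pred == target, leaves a loss of ln(2).
soft_best = binary_crossentropy(0.5, 0.5)
soft_worse = binary_crossentropy(0.5, 0.9)

print(hard, soft_best, soft_worse)
```

For a soft target the loss is minimized when the prediction equals the target, and the value at that minimum is the entropy of the target, so a plateau above zero does not by itself indicate poor reconstructions.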

Display sample results

See the results using the model you just trained.

def display_one_row(disp_images, offset, shape=(28, 28)):
    '''Display sample outputs in one row.'''
    for idx, test_image in enumerate(disp_images):
        plt.subplot(3, 10, offset + idx + 1)
        plt.xticks([])
        plt.yticks([])
        test_image = np.reshape(test_image, shape)
        plt.imshow(test_image, cmap='gray')


def display_results(disp_input_images, disp_encoded, disp_predicted, enc_shape=(8,4)):
    '''Displays the input, encoded, and decoded output values.'''
    plt.figure(figsize=(15, 5))
    display_one_row(disp_input_images, 0, shape=(28,28,))
    display_one_row(disp_encoded, 10, shape=enc_shape)
    display_one_row(disp_predicted, 20, shape=(28,28,))

# take 1 batch of the dataset
test_dataset = test_dataset.take(1)

# take the input images and put them in a list
output_samples = []
for input_image, image in tfds.as_numpy(test_dataset):
    output_samples = input_image

# pick 10 random numbers to be used as indices to the list above
idxs = np.random.choice(BATCH_SIZE, size=10)

# get the encoder output
encoded_predicted = deep_encoder_model.predict(test_dataset)

# get a prediction for the test batch
deep_predicted = deep_autoencoder_model.predict(test_dataset)

# display the 10 samples, encodings and decoded values!
display_results(output_samples[idxs], encoded_predicted[idxs], deep_predicted[idxs])

1/1 [==============================] - 0s 180ms/step

1/1 [==============================] - 0s 113ms/step
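Two details of the display code are worth a closer look: `np.random.choice` samples with replacement by default, so the same test image can appear more than once in a row, and the middle row shows each 32-value encoding reshaped to 8x4 purely for visualization. The sketch below illustrates both points with NumPy alone; `fake_encodings` is a hypothetical stand-in for the encoder output, and the seeded generator is an assumption made only so the sketch is repeatable.

```python
import numpy as np

BATCH_SIZE = 128
rng = np.random.default_rng(seed=0)

# replace=False guarantees 10 distinct indices into the batch.
idxs = rng.choice(BATCH_SIZE, size=10, replace=False)

# Hypothetical stand-in for the encoder output: one 32-value code per image.
fake_encodings = rng.random((BATCH_SIZE, 32), dtype=np.float32)

# Each selected code becomes an 8x4 tile, matching enc_shape=(8, 4).
tiles = fake_encodings[idxs].reshape(-1, 8, 4)

print(len(set(idxs.tolist())), tiles.shape)  # 10 (10, 8, 4)
```

The 8x4 layout has no spatial meaning; any shape whose dimensions multiply to 32 would display the same values.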